RDEL #114: Where do developers actually want AI to support their work?

Developers welcome AI for toil and boilerplate but demand human oversight for high-stakes decisions and resist automation in mentoring and strategic work

Welcome back to Research-Driven Engineering Leadership. Each week, we pose an interesting topic in engineering leadership and apply the latest research in the field to drive to an answer.

As AI coding assistants become ubiquitous in software development, engineering leaders face a critical challenge: where should these tools augment work, and where might they undermine the craft, relationships, and decision-making that define effective engineering? While AI promises efficiency gains, the gap between capability and meaningful integration grows wider each day. This week we ask: Where do developers actually want AI support in their daily work, and how can leaders deploy it responsibly?

The context

Generative AI tools are rapidly becoming standard in software development workflows. Yet despite widespread availability, adoption reveals an interesting pattern: developers use AI inconsistently across their work, often defaulting to it for familiar tasks while avoiding it for others. Engineering leaders lack clear guidance on which aspects of software work genuinely benefit from AI support versus which aspects suffer when AI is inserted into the workflow.

The challenge runs deeper than capability. Even when AI can technically perform a task, developers may resist using it—or use it in ways that create new problems. Some developers report that AI helps them stay in flow, while others worry it’s eroding their skills or pulling them away from work they find meaningful. Without understanding the psychological and professional considerations that shape these patterns, leaders risk deploying AI in ways that optimize the wrong things: boosting output while hollowing out expertise, automating tasks that should remain human, or missing opportunities to reduce genuine toil.

The research

Researchers from Oregon State University and Microsoft conducted a large-scale mixed-methods study of 860 software developers, examining how their cognitive appraisals of tasks (across dimensions of value, identity, accountability, and demands) predict their openness to and use of AI tools, and which Responsible AI principles they prioritize across different work contexts.

Key findings include:

Task appraisals strongly predict AI adoption patterns. Developers showed increased openness and usage for high-value tasks, high-demand work, and high-accountability situations. However, identity-aligned tasks showed a dual pattern: lower openness but higher usage, suggesting developers protect ownership while strategically using AI to refine their craft.

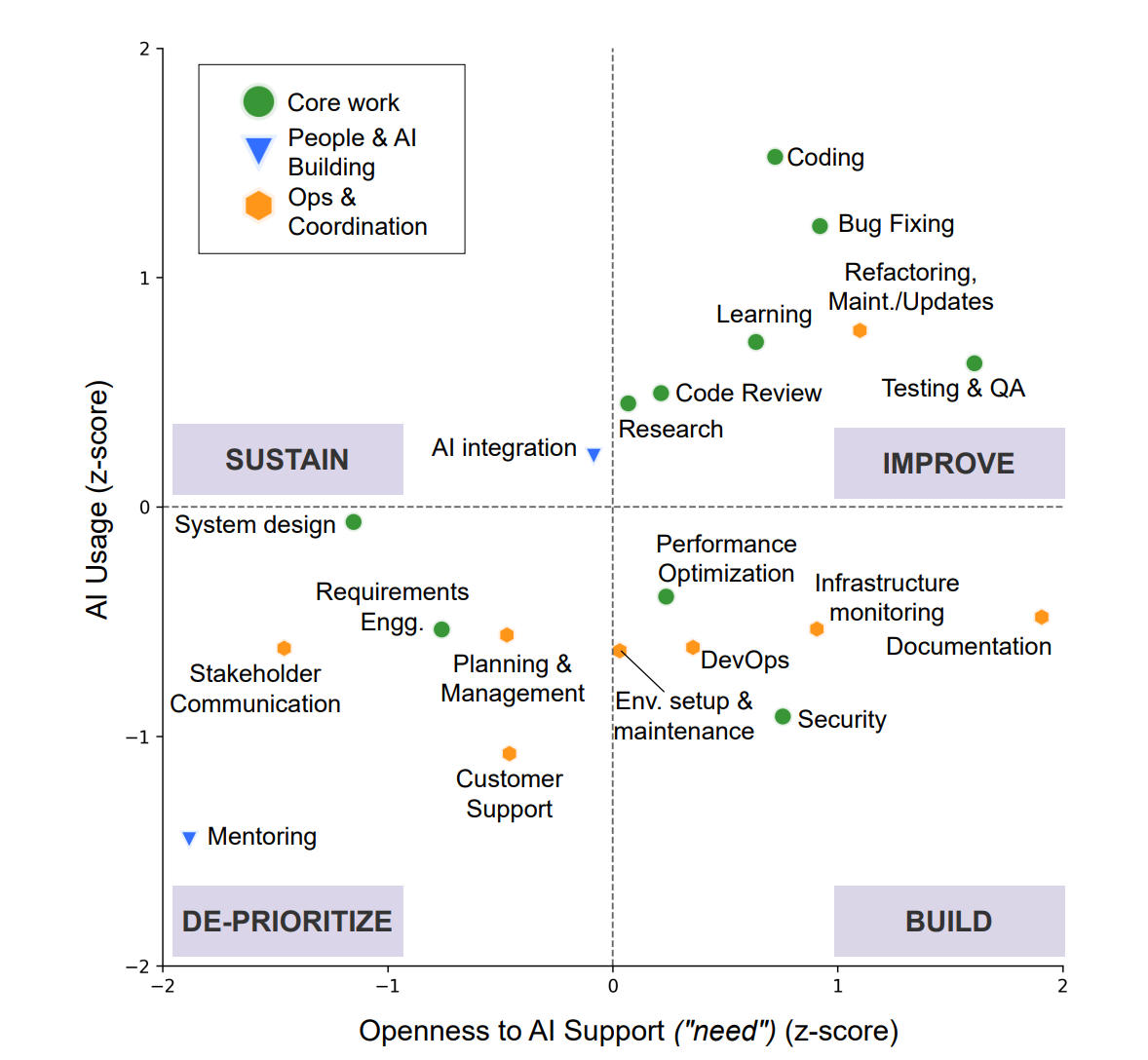

Three distinct task clusters emerged with different AI support patterns (interactive dashboard here):

Core work (coding, testing, debugging, code review) concentrated in “Build/Improve” zones with high AI demand

People and AI-building work (mentoring, AI integration) fell into “Sustain/De-prioritize” zones with low AI need due to identity and relational concerns

Ops and coordination work (DevOps, documentation, stakeholder communication) showed moderate demand but lagged adoption due to trust and capability gaps.

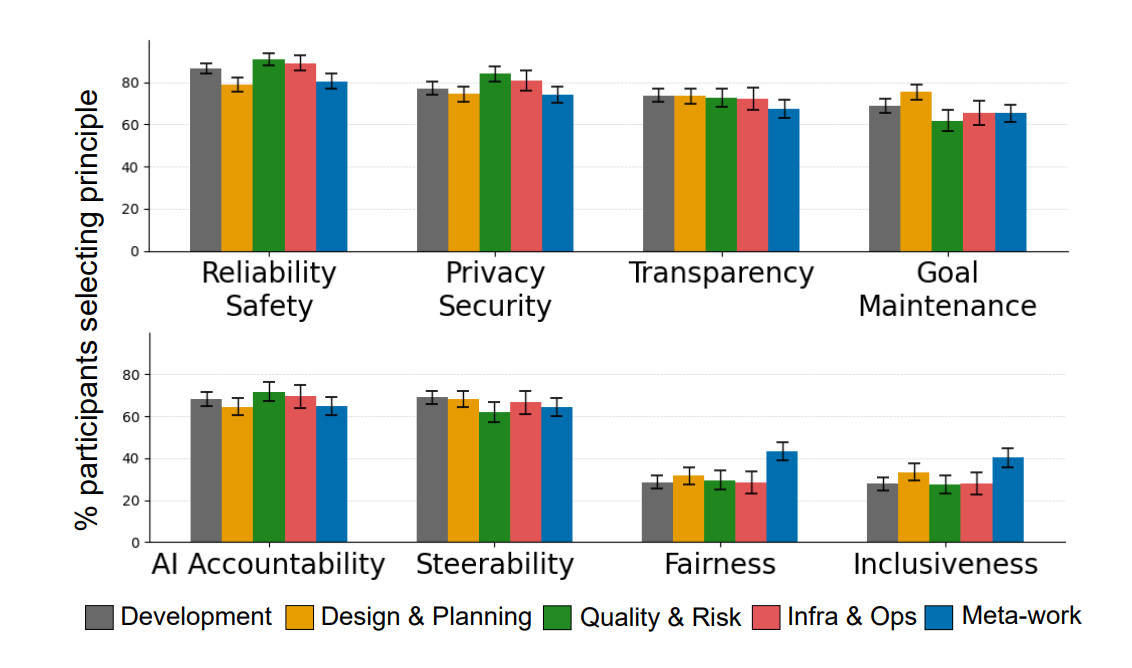

Responsible AI priorities vary dramatically by context. For systems-facing work, developers prioritized Reliability & Safety (95% selection rate) and Privacy & Security (89%) as non-negotiable prerequisites, followed by Transparency (84%), Goal Maintenance (82%), and Steerability (79%). For design and human-facing work, Fairness and Inclusiveness became 2-3x more salient, while Reliability requirements relaxed for creative scaffolding.

Developers want AI as a collaborator, not a replacement. Qualitative analysis revealed strong resistance to full automation. Developers welcomed AI taking on more boilerplate work to free their focus for creative problem-solving, but insisted on retaining oversight and decision control in high-stakes, identity-laden work.

“I can’t fully delegate the final code review to AI—my approval puts my name on it” (P117).

Experience and dispositions impact AI adoption. More experienced developers showed lower AI usage but higher demands for Reliability & Safety and Steerability. Risk-tolerant developers sought significantly more AI support for high-value and high-demand work, while technophilic individuals prioritized Goal Maintenance and used AI more actively under accountability pressure.

The application

The research reveals that meaningful AI integration requires matching tools to how developers actually experience their work. Developers welcome AI that reduces toil and amplifies core technical capabilities, but resist it in work that defines their professional identity or builds team relationships. The pattern to note is that AI works best as a cognitive collaborator for high-demand, low-identity tasks and as an augmentation tool for high-value core work.

To apply these findings, engineering leaders should consider the following:

Map your team’s work to identify where AI fits. Capture feedback from developers on their current AI use cases and on where they’d want to use AI more to reduce toil. Focus AI deployment on high-demand operational toil (documentation, environment setup, CI/CD maintenance) and on augmenting core technical work (coding, testing, debugging), but deprioritize it for mentoring, strategic planning, and other places where human judgment and connection are irreplaceable.

Gate AI adoption on trust prerequisites before expanding scope. For systems-facing work, 95% of developers selected Reliability & Safety and 89% selected Privacy & Security as priorities. Ensure current AI tools meet these baselines with transparency features, steerability controls, and accountability mechanisms like traces.

Design for augmentation, not automation, in core technical work. Implement AI with human oversight: suggest-only modes, reversible changes, and explicit approval checkpoints for coding, testing, and code review. This preserves developer agency, prevents deskilling, and maintains the quality judgment that defines effective engineering work.
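For teams that want to make this concrete, here is a minimal Python sketch of a suggest-only workflow with an explicit approval checkpoint and reversible changes. It is an illustration under assumptions, not something taken from the study: the `Suggestion` and `Workspace` classes and their methods are hypothetical names, and a real setup would sit on top of your version control and review tooling.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple


@dataclass
class Suggestion:
    """A proposed change from an AI tool (hypothetical type); never applied on its own."""
    path: str
    new_text: str
    approved_by: Optional[str] = None  # set only when a human signs off


@dataclass
class Workspace:
    """In-memory stand-in for a codebase (an assumption made for illustration)."""
    files: Dict[str, str] = field(default_factory=dict)
    # Undo stack of (path, previous_text); None means the file did not exist before.
    history: List[Tuple[str, Optional[str]]] = field(default_factory=list)

    def approve(self, suggestion: Suggestion, reviewer: str) -> None:
        # Explicit approval checkpoint: a named human takes responsibility.
        suggestion.approved_by = reviewer

    def apply(self, suggestion: Suggestion) -> None:
        # Suggest-only mode: unapproved suggestions are rejected.
        if suggestion.approved_by is None:
            raise PermissionError("suggestion requires explicit human approval")
        self.history.append((suggestion.path, self.files.get(suggestion.path)))
        self.files[suggestion.path] = suggestion.new_text

    def undo(self) -> None:
        # Reversible changes: restore the state before the last applied suggestion.
        path, previous = self.history.pop()
        if previous is None:
            self.files.pop(path, None)
        else:
            self.files[path] = previous
```

The design choice worth noting is that approval is recorded against a named reviewer rather than as a boolean flag, which mirrors the participant's point that approval "puts my name on it."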

—

Happy Research Tuesday,

Lizzie