RDEL #108: What distinguishes developers who successfully adopt AI coding tools from those who don't?

Developers who view AI tools as "collaborators" tend to adopt them successfully, while those who treat them as "features" are more likely to abandon them.

Welcome back to Research-Driven Engineering Leadership. Each week, we pose an interesting topic in engineering leadership, and apply the latest research in the field to drive to an answer.

Generative AI tools are now widely available across engineering organizations, yet adoption remains uneven—even among developers on the same team. This week we ask: What distinguishes developers who successfully adopt AI coding tools from those who don't, and how can engineering leaders create conditions that support effective adoption across their teams?

The context

The software engineering industry has experienced a rapid influx of generative AI development tools, with many organizations investing heavily in these technologies based on projections of 20-55% productivity improvements. However, real-world results have been strikingly mixed. While some developers achieve the promised gains, others experience decreased efficiency or abandon the tools entirely after encountering challenges.

This uneven adoption creates significant organizational challenges. It hampers company-wide productivity efforts, frustrates management expectations around return on investment, and creates uncertainty about the evolving role of software developers. The puzzle becomes even more complex when considering that developers working on the same teams—with identical access to tools, shared codebases, and similar management expectations—often show dramatically different usage patterns. Understanding what drives these differences has become critical for organizations looking to realize the potential benefits of their AI tool investments.

The research

Researchers conducted semi-structured interviews with 54 software developers at a large multinational software company. The developers formed 27 matched pairs, each drawn from a single team, and telemetry data was used to identify one frequent and one infrequent AI tool user per team. This paired design allowed researchers to isolate individual factors by controlling for team-level variables like codebase complexity, management culture, and organizational policies.
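For leaders who want to look at their own teams in a similar way, the pairing step can be sketched in a few lines. This is only an illustration: the study does not describe its exact selection rule, so the usage metric (`weekly_ai_events`), the "highest versus lowest on each team" rule, and every team and developer name below are assumptions, not the researchers' method.

```python
from collections import defaultdict


def select_matched_pairs(usage_records):
    """Pick one frequent and one infrequent AI tool user per team.

    usage_records: iterable of (team, developer, weekly_ai_events) tuples.
    The metric and the selection rule are hypothetical, for illustration only.
    Returns {team: (frequent_user, infrequent_user)}.
    Teams with fewer than two developers are skipped.
    """
    members_by_team = defaultdict(list)
    for team, developer, weekly_ai_events in usage_records:
        members_by_team[team].append((weekly_ai_events, developer))

    pairs = {}
    for team, members in members_by_team.items():
        if len(members) < 2:
            continue  # a pair needs two people on the same team
        members.sort()  # ascending by usage, ties broken by developer name
        infrequent_user = members[0][1]
        frequent_user = members[-1][1]
        pairs[team] = (frequent_user, infrequent_user)
    return pairs


# Made-up telemetry, not data from the study.
telemetry = [
    ("payments", "dev_a", 42),
    ("payments", "dev_b", 3),
    ("payments", "dev_c", 17),
    ("search", "dev_d", 0),
    ("search", "dev_e", 28),
    ("infra", "dev_f", 9),
]

pairs = select_matched_pairs(telemetry)
for team, (frequent_user, infrequent_user) in sorted(pairs.items()):
    print(f"{team}: frequent={frequent_user}, infrequent={infrequent_user}")
```

Because both people in a pair share a team, differences between them are less likely to come from the codebase, tooling access, or management, which is the point of the paired design.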

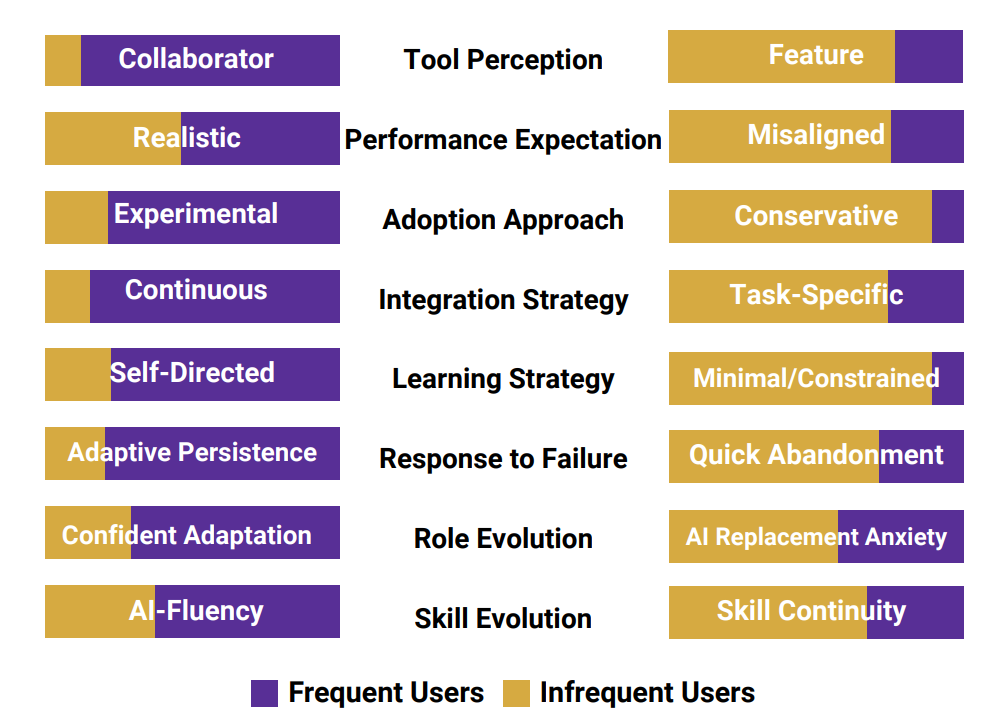

The study revealed several key findings about what distinguishes frequent from infrequent AI tool users:

Tool perception drives usage patterns: Frequent users viewed AI tools as "collaborative partners" or "teammates," while infrequent users saw them as enhanced features or utilities.

Response to failure determines long-term adoption: When encountering challenges, frequent users demonstrated "adaptive persistence," treating failures as puzzles to solve, while infrequent users were more likely to abandon the tools quickly. This difference in persistence appeared to be the critical factor separating successful from unsuccessful adoption.

Learning approaches varied significantly: Frequent users engaged in self-directed learning across multiple resources—blog posts, videos, peer examples—while infrequent users limited exploration to minimal, low-friction interactions within the tool interface itself.

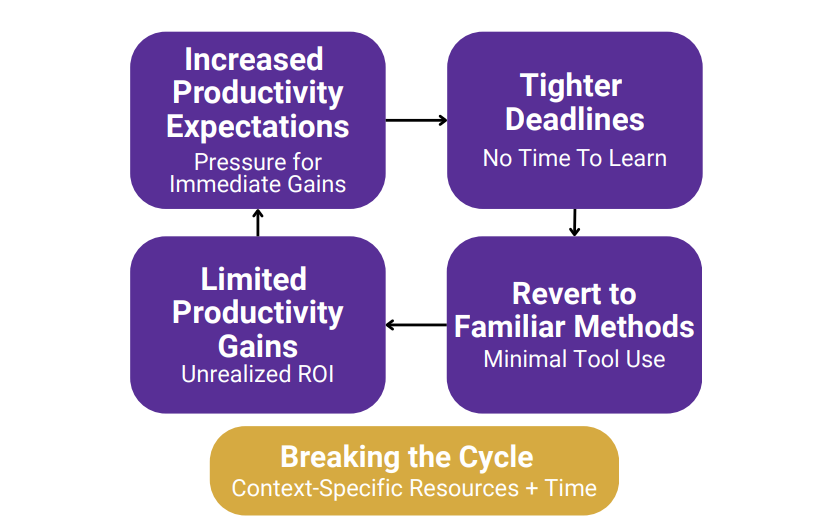

The "Productivity Pressure Paradox" emerged as a key organizational barrier: When management raised productivity expectations without providing corresponding learning support, it created a counterproductive cycle. Developers lacked the time to build the skills needed to achieve productivity gains, so they reverted to familiar methods under deadline pressure.

Team factors amplify individual differences: While individual mindsets drove adoption patterns, organizational factors like leadership messaging, context-specific guidance, and social learning structures could either reinforce success or exacerbate difficulties, but didn't uniformly affect all team members.

The application

The research reveals that successful AI tool adoption isn't simply about individual initiative—it requires a combination of the right individual mindset and supportive organizational conditions. The most critical insight is that expecting immediate productivity gains without investing in learning infrastructure creates a paradox that undermines the very benefits organizations seek.

Engineering leaders can apply these findings to improve AI tool adoption across their teams:

Reframe productivity expectations and provide protected learning time: Instead of expecting immediate gains, explicitly allocate time for experimentation and skill development. Consider running short hackathons or temporarily relaxing velocity expectations to break the productivity pressure paradox.

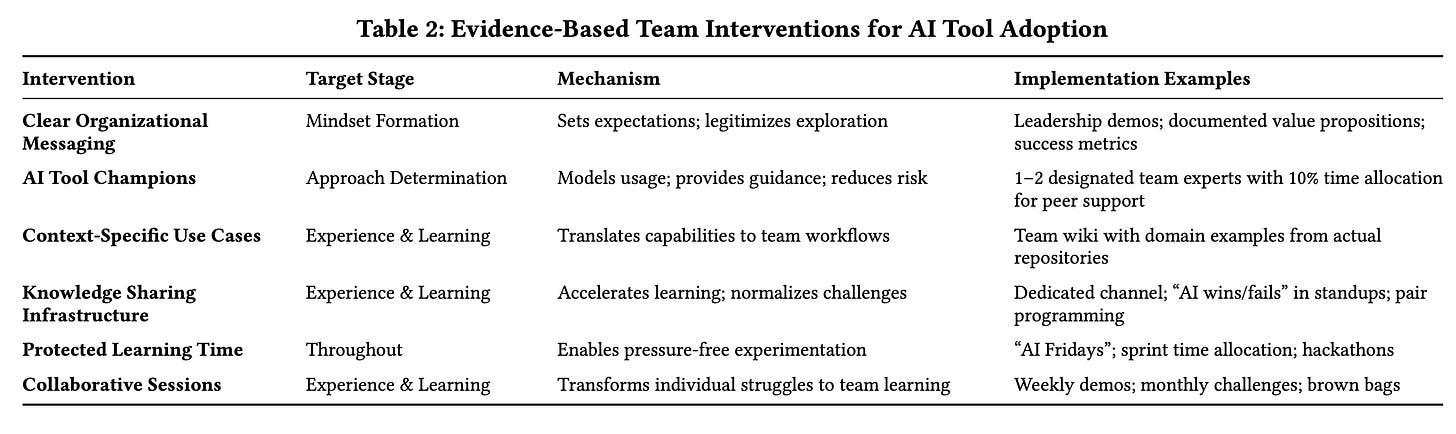

Invest in context-specific guidance rather than generic training: Developers need concrete examples of how to apply AI tools to their specific codebase and common tasks. Create team wikis with domain-specific examples, organize live demonstrations of AI tool usage on actual team projects, and establish internal champions who can model effective usage patterns.

Build social learning structures to amplify individual discoveries: Establish dedicated channels for sharing AI "wins and fails," incorporate AI usage discussions into stand-ups, and create formal knowledge-sharing sessions. When teams have robust sharing practices, individual breakthroughs can create momentum for broader adoption rather than remaining isolated successes.

For even more inspiration, the researchers also shared a few evidence-based interventions for improving AI adoption (below).

—

Hopefully this gives you a few AI adoption strategies to share with your team. Happy Research Tuesday!

Lizzie