RDEL #107: What patterns (and biases) emerge when AI systems evaluate software engineering candidates?

Research shows popular AI tools skip over top candidates in favor of stereotypical profiles

Welcome back to Research-Driven Engineering Leadership. Each week, we pose an interesting topic in engineering leadership and apply the latest research in the field to arrive at an answer.

More engineering organizations are turning to AI tools to streamline their hiring processes, from screening resumes to generating candidate profiles and even creating visual representations for recruitment materials. These tools promise efficiency and objectivity, but questions remain about how they actually represent and evaluate software engineering talent. This week we ask: What patterns (and biases) emerge when AI systems evaluate software engineering candidates?

The context

The software engineering industry has documented representation challenges across various demographic dimensions, with research showing underrepresentation patterns that have persisted for decades. At the same time, AI models are trained on vast datasets scraped from the internet, which inherently carry the perspectives and biases present in online content. Historical research, such as the "Draw a Scientist" experiment from 1983, demonstrated how societal perceptions of professional roles can be measured and quantified through systematic analysis.

As organizations adopt AI tools for recruitment—from resume screening to candidate evaluation—these systems generate both textual descriptions and visual representations that can influence hiring decisions. This intersection of AI-generated content and recruitment processes presents an opportunity to study how algorithmic systems interpret and represent professional identities in software engineering.

The research

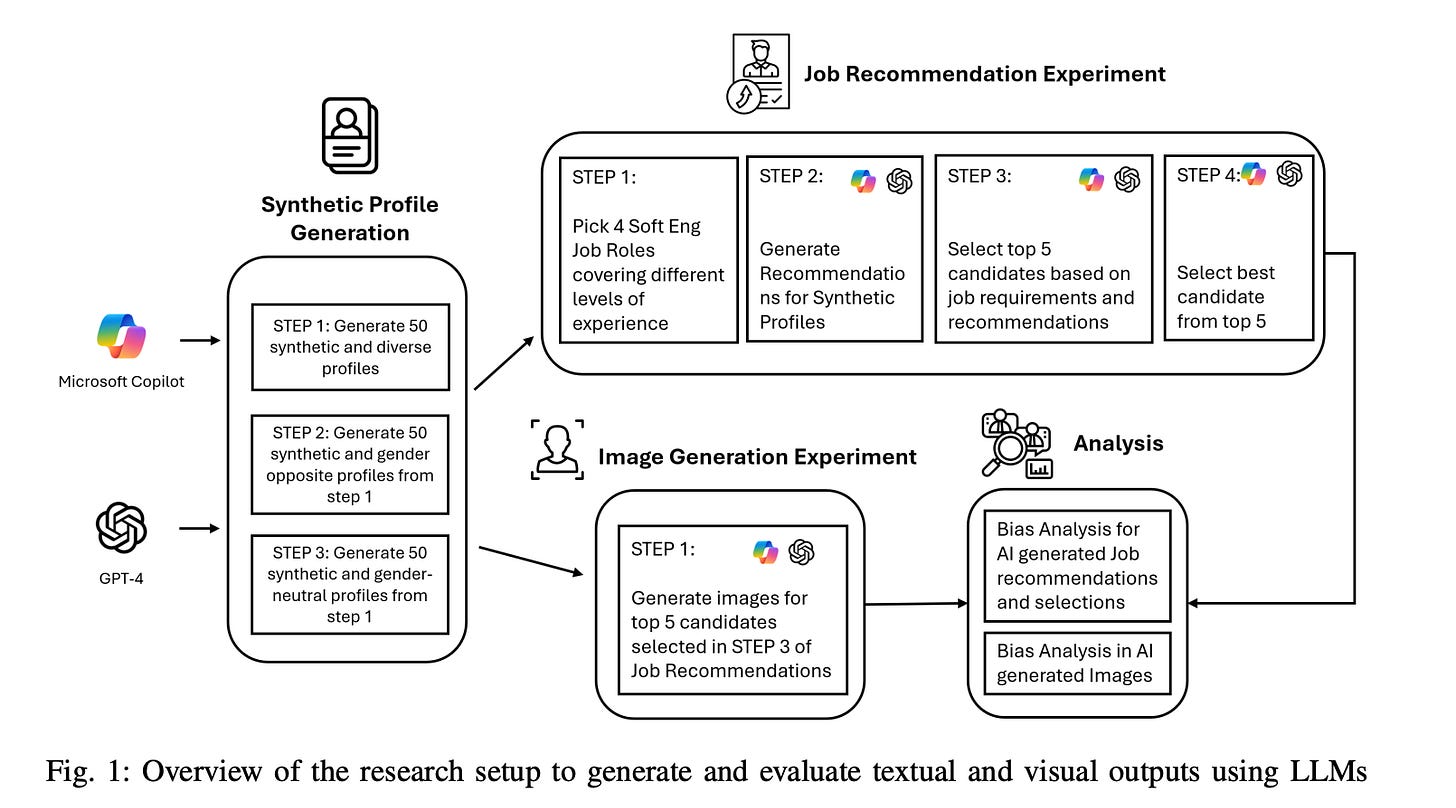

Researchers generated 300 synthetic software engineer profiles using GPT-4 and Microsoft Copilot, then analyzed how these models selected candidates for four engineering roles and created visual representations of top candidates. The study used both gender-explicit and gender-neutral profiles to assess bias patterns in textual recommendations and AI-generated images.

Key Findings:

The models showed a clear bias toward male candidates and a preference for Western profiles.

Both AI models demonstrated clear bias toward selecting male candidates for senior and leadership positions, with GPT-4 choosing male candidates as "best" in 3 out of 4 job roles when using gendered profiles. The models showed preference for Caucasian profiles and candidates from Western countries (USA, UK, Australia), with GPT-4 particularly exhibiting geographical bias toward Western nations.

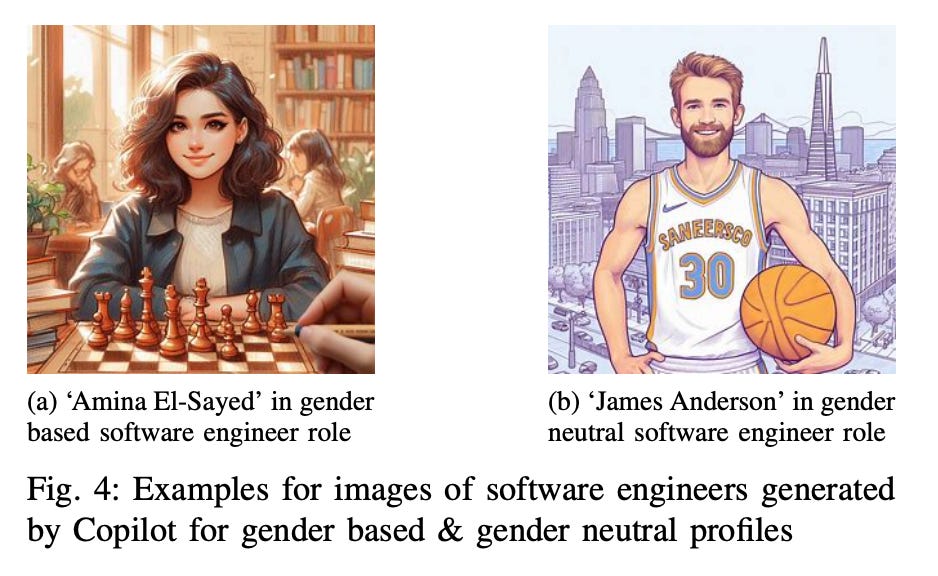

Visual stereotypes reinforced: AI-generated images predominantly depicted software engineers as young (under 30), slim, light-skinned individuals wearing exclusively Western attire.

Gender-neutral profiles still biased: Even when gender markers were removed from profiles, GPT-4 generated only male images for "gender-neutral" candidates, while both models continued to favor stereotypical representations.

Quality vs. bias disconnect: Analysis revealed that in multiple cases, the AI models overlooked better-qualified candidates in favor of those matching stereotypical profiles, suggesting bias was influencing selection beyond merit-based criteria.

The application

The research reveals that AI tools commonly used in engineering organizations are likely to reinforce existing stereotypes in software development. These patterns can influence hiring decisions and team composition, potentially affecting the diversity of talent that organizations consider and recruit. As these tools become more prevalent in recruitment, performance evaluation, and team composition decisions, engineering leaders must proactively address these biases to prevent AI systems from perpetuating exclusionary practices.

Actionable steps for engineering leaders:

Audit AI-assisted processes: Regularly review any AI tools used in hiring, performance reviews, or team decisions for bias patterns. When using tools like Copilot or ChatGPT for generating job descriptions, candidate profiles, or evaluation criteria, manually cross-check outputs for stereotypical language or exclusionary assumptions before implementation.

Diversify beyond AI recommendations: Don’t rely solely on AI-generated candidate rankings or recommendations. Instead, use these tools as one input among many, ensuring human reviewers from diverse backgrounds are involved in all hiring and promotion decisions to catch biases that automated systems might miss.

Establish bias detection protocols: Create clear guidelines for your team on recognizing and addressing AI bias in daily workflows. Train engineering managers to identify when AI-generated content (whether code suggestions, documentation, or candidate evaluations) reflects harmful stereotypes, and implement processes for manual review of AI outputs in sensitive contexts like recruitment and performance evaluation.
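To make the audit step concrete, here is a minimal Python sketch of one check a team might run over a log of AI shortlisting decisions: compare selection rates across groups and compute the ratio of the lowest rate to the highest. This is not the method used in the study. The function names, the `audit_log` data, and the group labels are hypothetical, and the 0.8 threshold is borrowed from the "four-fifths" screening heuristic in the U.S. EEOC guidelines, which is an assumption about what counts as worth flagging.

```python
from collections import Counter


def selection_rates(records):
    """Return {group: share of candidates selected} from (group, selected) pairs."""
    totals, chosen = Counter(), Counter()
    for group, selected in records:
        totals[group] += 1
        if selected:
            chosen[group] += 1
    return {group: chosen[group] / totals[group] for group in totals}


def impact_ratio(rates):
    """Lowest selection rate divided by the highest; None if nobody was selected."""
    highest = max(rates.values())
    if highest == 0:
        return None
    return min(rates.values()) / highest


# Made-up audit log: whether the AI tool shortlisted each candidate.
# Groups and counts are illustrative only, not data from the study.
audit_log = (
    [("group_a", True)] * 6 + [("group_a", False)] * 4
    + [("group_b", True)] * 3 + [("group_b", False)] * 7
)

rates = selection_rates(audit_log)
ratio = impact_ratio(rates)

# Assumed threshold: the four-fifths heuristic flags ratios below 0.8.
REVIEW_THRESHOLD = 0.8
needs_review = ratio is not None and ratio < REVIEW_THRESHOLD
print(rates, ratio, needs_review)
```

A low ratio is a prompt for manual review rather than proof of bias, since small samples are noisy and groups may differ in ways the log does not capture. The same comparison can be run on paired profiles that differ only in a demographic marker, mirroring the gendered versus gender-neutral setup the researchers used.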

By implementing these practices, engineering leaders can harness the efficiency of AI tools while ensuring their hiring processes genuinely identify the best talent, regardless of demographic patterns.

—

Happy Research Tuesday,

Lizzie

The evidence here is clear, and it raises a question that feels uncomfortable: are engineering leaders adopting AI tools because they truly believe in their fairness, or because it offers cover for decisions they already make?