RDEL #87: How do AI coding tools actually change developer work?

A real-world trial of GitHub Copilot finds meaningful improvements in satisfaction and workflow, but no significant gains in output or trust.

Welcome back to Research-Driven Engineering Leadership. Each week, we pose an interesting topic in engineering leadership and apply the latest research in the field to drive to an answer.

Engineering leaders are flooded with headlines about AI coding tools like GitHub Copilot, but much of the research has focused on lab-based productivity metrics. What actually happens when developers use these tools in their real jobs, with all the constraints, ambiguity, and social context that entails? This week we ask: What actually changes when developers use generative AI coding tools in their day-to-day work?

The context

Generative AI coding tools like GitHub Copilot have promised a revolution in developer productivity, and early studies—mostly in lab environments—have reinforced that idea. But lab studies can only tell us so much. Real-world software development is shaped by legacy systems, cross-functional collaboration, and unpredictable day-to-day work. It’s a far cry from a controlled experiment focused on a single task.

To understand the true impact of AI tools, we need to go beyond technical performance and examine how they shape the human experience of engineering. Do they save time in complex environments? Do they create friction or foster flow? And importantly, how do they influence how developers think about their own value and future in a rapidly evolving landscape?

The research

Researchers from Microsoft and the Institute for Work Life ran a three-week randomized controlled trial of GitHub Copilot with 228 engineers at a large global software company. Engineers who were not yet using Copilot were randomly assigned either to receive access to it and use it (treatment) or to avoid all AI tools (control); a third group of engineers who were already using Copilot kept using it (continuing). Throughout the trial, participants in all groups completed daily diary entries, and researchers collected telemetry data so they could compare behavioral patterns with shifts in beliefs and attitudes.

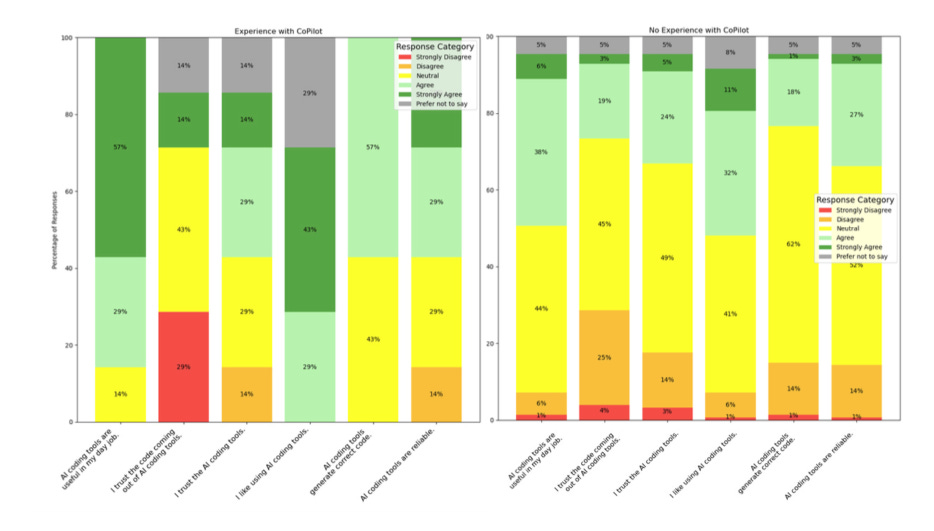
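For leaders who want to run a similar pilot on their own teams, the assignment logic can be sketched in a few lines of Python. This is a hypothetical illustration, not the researchers’ procedure: the function name `assign_groups`, the even split, and the fixed seed are all assumptions. It shows the key design point that only engineers new to the tool are randomized, while existing users form a separate, non-randomized continuing group.

```python
import random

def assign_groups(engineers, already_using, seed=0):
    """Hypothetical sketch: randomize only engineers who are new to the tool.

    Engineers already using the tool form a separate 'continuing' group that
    is not randomized; everyone else is shuffled and split evenly into
    treatment (given access) and control (asked not to use AI tools).
    """
    rng = random.Random(seed)  # seeded so the split is reproducible
    continuing = [e for e in engineers if e in already_using]
    new_users = [e for e in engineers if e not in already_using]
    rng.shuffle(new_users)
    half = len(new_users) // 2
    return {
        "treatment": new_users[:half],
        "control": new_users[half:],
        "continuing": continuing,
    }

# Usage with a hypothetical ten-person team, two of whom already use the tool
team = [f"eng{i}" for i in range(10)]
groups = assign_groups(team, already_using={"eng0", "eng1"})
for name, members in groups.items():
    print(name, len(members))
```

Because the continuing group chose the tool on its own, comparisons involving it are observational; only the treatment-versus-control contrast carries the causal weight of randomization.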

Before the trial began, researchers collected participants’ initial perceptions of the tool. Two insights stood out:

Prior experience shaped perceived usefulness and enjoyment

86% of engineers with prior experience agreed that AI coding tools are useful, compared to only 44% of those without experience. Similarly, 72% of those who had used GitHub Copilot reported that they liked using it, compared to only 43% of those who had not.

Time for exploration impacted usage

Among engineers who had not yet tried the tool, the most common reason given was that they were too busy to explore it.

After the three-week study, these were the top findings:

Perceived usefulness and enjoyment jumped, and new use cases emerged:

In the treatment group, average responses on a 1-5 Likert scale to “I like using AI coding tools” rose from 2.72 to 3.61, and responses to “AI tools are useful” rose from 2.93 to 3.51.
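To put those shifts in perspective, the arithmetic can be sketched as follows. It takes the two reported means for each statement and expresses the change both in scale points and as a share of the scale’s 4-point range (from 1 to 5). Treating Likert means as interval data is a common simplification, and `likert_shift` is a hypothetical helper name used only for this illustration.

```python
SCALE_MIN, SCALE_MAX = 1, 5  # the 1-5 Likert scale reported in the study

def likert_shift(before: float, after: float) -> tuple[float, float]:
    """Return (change in scale points, change as a % of the scale's range)."""
    shift = after - before
    share_of_range = shift / (SCALE_MAX - SCALE_MIN) * 100
    return shift, share_of_range

# Treatment-group means reported above: (before, after)
reported = {
    "I like using AI coding tools": (2.72, 3.61),
    "AI tools are useful": (2.93, 3.51),
}

for statement, (before, after) in reported.items():
    points, pct = likert_shift(before, after)
    print(f"{statement}: +{points:.2f} points, {pct:.1f}% of the scale's range")
```

By this measure, enjoyment rose by 0.89 points (about 22% of the scale’s range) and perceived usefulness by 0.58 points (about 14.5%).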

Developers used Copilot not just for boilerplate code, but also as a replacement for web searches and as a tool for ideation and design.

84% said Copilot positively changed how they worked:

Many reported spending less time on boring tasks and more time coding, and feeling more energized and excited about their work.

66% of engineers reported that using these tools changed how they felt about their work.

Telemetry data showed no measurable productivity gains: There were no statistically significant differences in lines of code, PRs, or time spent coding between the treatment and control groups.
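What does “no statistically significant difference” mean in practice? One common way to check a telemetry metric is a permutation test: shuffle the group labels many times and count how often a random split produces a gap in means at least as large as the one observed. This is a generic illustration, not the analysis the researchers ran, and the per-engineer pull request counts are made up.

```python
import random
from statistics import mean

def permutation_test(treatment, control, n_resamples=10_000, seed=0):
    """Two-sided permutation test for a difference in group means.

    Returns (observed difference in means, p-value).
    """
    rng = random.Random(seed)  # seeded for reproducible results
    observed = mean(treatment) - mean(control)
    pooled = list(treatment) + list(control)
    n_t = len(treatment)
    at_least_as_extreme = 0
    for _ in range(n_resamples):
        rng.shuffle(pooled)  # randomly relabel engineers as treatment/control
        diff = mean(pooled[:n_t]) - mean(pooled[n_t:])
        if abs(diff) >= abs(observed):
            at_least_as_extreme += 1
    # Add-one correction keeps the estimated p-value from ever being exactly zero.
    p_value = (at_least_as_extreme + 1) / (n_resamples + 1)
    return observed, p_value

# Hypothetical weekly PR counts per engineer (illustrative data, not from the study)
treatment_prs = [3, 5, 4, 6, 2, 4, 5, 3]
control_prs = [4, 3, 5, 4, 3, 5, 4, 2]

diff, p = permutation_test(treatment_prs, control_prs)
print(f"difference in means: {diff:.2f} PRs, p = {p:.3f}")
```

A large p-value means a gap of that size shows up often by chance alone, so it is not evidence of a real effect. It is also not proof of zero effect: a short trial may lack the statistical power to detect small changes.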

Trust stayed flat: Despite enjoying the tools more, developers didn’t become more likely to say they trusted the code the tools produced.

The application

This study suggests that the impact of AI coding tools extends beyond what telemetry alone can capture. The SPACE framework describes developer productivity along five dimensions: Satisfaction and well-being, Performance, Activity, Communication and collaboration, and Efficiency and flow. By that definition, true impact includes how developers feel, how smoothly they work, and whether they’re focused on the right tasks. In this case, Copilot users reported feeling more energized, more effective, and more able to offload repetitive work.

What engineering leaders can do:

Broaden your metrics: Don’t just measure output—track satisfaction, flow, and perceived progress to understand AI’s full impact.

Create space for adoption: Before the trial, the most common reason engineers gave for not trying Copilot was being too busy. Carve out time and support for experimentation.

Position AI as a tool for better work: Encourage teams to use it for low-leverage tasks so they can focus on design, learning, and strategic thinking.
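One lightweight way to act on “Broaden your metrics” is to record a perception signal next to the usual activity counts and report them side by side rather than merging them into a single score. This sketch assumes a short weekly pulse survey, loosely inspired by the study’s daily diaries; the `WeeklySignal` fields, the survey wording, and the 1-5 scales are hypothetical choices, not part of the research.

```python
from dataclasses import dataclass
from statistics import mean

@dataclass
class WeeklySignal:
    """One engineer's week. All fields are hypothetical examples."""
    satisfaction: int  # 1-5 answer to, e.g., "I enjoyed my work this week"
    flow: int          # 1-5 answer to, e.g., "I could focus on meaningful work"
    prs_merged: int    # activity count pulled from repository telemetry

def summarize(signals: list[WeeklySignal]) -> dict[str, float]:
    """Average each signal separately so perception and activity stay visible."""
    return {
        "satisfaction": mean(s.satisfaction for s in signals),
        "flow": mean(s.flow for s in signals),
        "prs_merged": mean(s.prs_merged for s in signals),
    }

# Hypothetical responses for a three-person team
team_week = [
    WeeklySignal(satisfaction=4, flow=3, prs_merged=5),
    WeeklySignal(satisfaction=5, flow=4, prs_merged=3),
    WeeklySignal(satisfaction=3, flow=4, prs_merged=4),
]
print(summarize(team_week))
```

Keeping the signals separate matters because, as the study found, satisfaction can rise while activity metrics stay flat; a blended score would hide exactly that pattern.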

—

Generative AI’s real value may lie not in how much code it helps write, but in how it helps developers do their best work more often. Wishing you all a great week, and happy Research Tuesday!

Lizzie
