RDEL #85: What is the impact of generative AI on software development?

Generative AI boosts productivity and satisfaction—but only if teams build trust, clarity, and strong delivery practices.

Welcome back to Research-Driven Engineering Leadership. Each week, we pose an interesting topic in engineering leadership and apply the latest research in the field to drive to an answer.

Generative AI is already changing how engineers work, from writing code to navigating meetings. But what happens when these tools move from individual experiments to broad organizational adoption? What trade-offs emerge when AI becomes a central part of how teams ship software? This week we dig into the DORA Research Group’s findings by asking: What is the impact of generative AI on software development?

The context

According to the 2024 DORA report, 76% of developers now use generative AI in some part of their daily work—and 89% of organizations are prioritizing its adoption. The early benefits are compelling: faster code generation, improved documentation quality, and tighter feedback loops. But as use becomes more widespread, the complexity grows.

As teams adopt these tools, the effects ripple far beyond individual productivity. AI is changing how developers collaborate, how code moves through the delivery pipeline, and how technical decisions are made. Speed gains in one area can create bottlenecks in another—especially if fundamentals like batch size, testing discipline, or team coordination don’t keep pace.

This shift raises a deeper question: Are our teams truly becoming more effective, or are we just working differently? The 2024 DORA research digs into that distinction, surfacing a more nuanced view of where generative AI helps—and where it introduces new risks.

Note: this builds on research released in the 2024 DORA report, which we previously covered.

The research

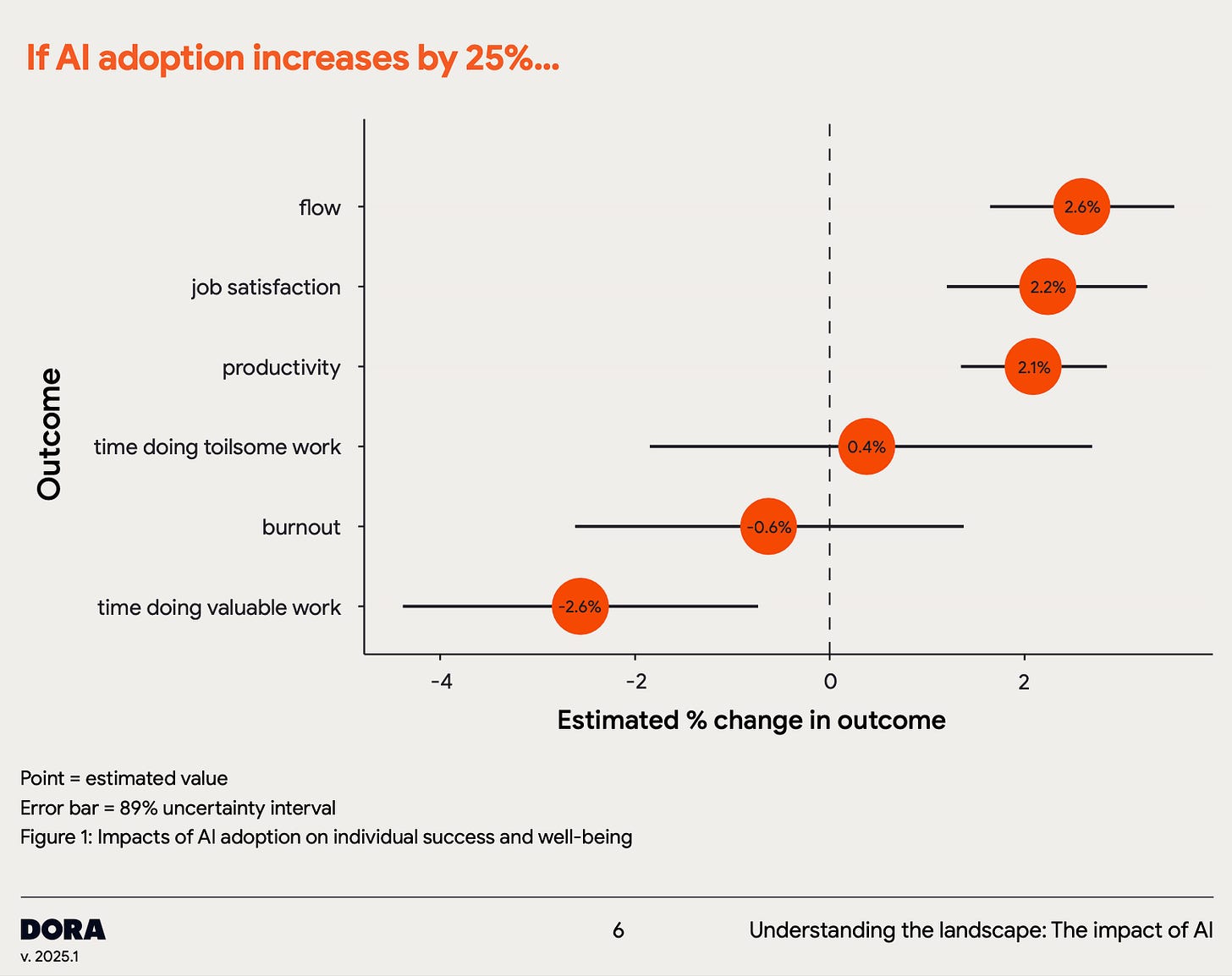

The DORA research team conducted a large-scale survey of over 36,000 software professionals, analyzing how varying levels of generative AI adoption correlated with key outcomes across individual, team, and organizational dimensions. They used statistical modeling to estimate the effects of a 25% increase in AI adoption on metrics such as productivity, code quality, delivery performance, and developer well-being.
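To make "the effect of a 25% increase in AI adoption" concrete, here is a minimal sketch that reads it as a regression slope. It is a deliberate simplification and not DORA's statistical model. It assumes ordinary least-squares on made-up adoption and outcome scores, treats "25%" as a relative increase from a baseline adoption level, and uses hypothetical function names. Like the survey findings, the result describes a correlation, not proof of cause.

```python
from statistics import mean


def fit_line(xs: list[float], ys: list[float]) -> tuple[float, float]:
    """Ordinary least-squares fit of y = intercept + slope * x."""
    x_bar, y_bar = mean(xs), mean(ys)
    sxx = sum((x - x_bar) ** 2 for x in xs)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return y_bar - slope * x_bar, slope


def effect_of_adoption_increase(
    adoption: list[float],
    outcome: list[float],
    baseline: float,
    increase: float = 0.25,
) -> float:
    """Percent change in the predicted outcome when adoption rises by
    `increase` (relative) from `baseline`. Assumes a linear relationship."""
    intercept, slope = fit_line(adoption, outcome)
    before = intercept + slope * baseline
    after = intercept + slope * baseline * (1 + increase)
    return 100 * (after - before) / before


# Hypothetical survey scores: AI adoption (x) vs. self-reported flow (y).
adoption = [1.0, 2.0, 3.0, 4.0, 5.0]
flow = [3.0, 3.5, 4.0, 4.5, 5.0]
print(effect_of_adoption_increase(adoption, flow, baseline=3.0))  # 9.375
```

On this toy data the slope is 0.5, so moving from an adoption score of 3.0 to 3.75 raises predicted flow from 4.0 to 4.375. Real survey data is noisier and has confounders, so a serious analysis needs controls that this sketch omits.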

The impact of GenAI

First, they modeled the impact of AI on individual developer well-being and success. A 25% increase in AI adoption was associated with the greatest improvements in flow, job satisfaction, and productivity. The greatest decrease, by contrast, was in time spent doing valuable work.

Next, they looked at organizational outcomes across processes, codebases, and team coordination. Here, AI adoption was associated with the greatest increase in documentation quality and the greatest decrease in code complexity.

Counterintuitively, the researchers found that increased AI adoption was associated with lower delivery stability. They hypothesize that this is because AI-assisted work tends to ship in larger batches, which are harder to review.
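If larger batches are the culprit, one practical guardrail is to measure change size before review. The sketch below is a hypothetical pre-review check: it sums added and deleted lines from `git diff --numstat` output (one `added<TAB>deleted<TAB>path` row per file, with `-` for binary files) and flags changes over a threshold. The 400-line limit and the function names are illustrative assumptions, not values from the DORA report.

```python
# Illustrative limit only; tune it to your team's review norms.
MAX_CHANGED_LINES = 400


def changed_lines(numstat: str) -> int:
    """Sum added + deleted lines from `git diff --numstat` output.

    Binary files report "-" for both counts and are skipped.
    """
    total = 0
    for row in numstat.splitlines():
        parts = row.split("\t")
        if len(parts) < 3 or parts[0] == "-" or parts[1] == "-":
            continue  # skip blank or malformed rows and binary files
        total += int(parts[0]) + int(parts[1])
    return total


def is_oversized(numstat: str, limit: int = MAX_CHANGED_LINES) -> bool:
    """True when the change exceeds `limit` changed lines."""
    return changed_lines(numstat) > limit


sample = "120\t30\tsrc/app.py\n300\t10\tsrc/generated.py\n-\t-\tassets/logo.png"
print(changed_lines(sample), is_oversized(sample))  # 460 True
```

In CI, the input could come from `subprocess.run(["git", "diff", "--numstat", "origin/main...HEAD"], capture_output=True, text=True).stdout`, assuming the main branch is named `main`. A flagged change is a prompt to split the work, not an automatic rejection.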

Strategies for measurement and improvement

The research offers numerous strategies for demonstrating AI’s value, increasing GenAI adoption, and measuring improvement.

The most impactful strategy for increasing adoption of AI tools was offering clear AI policies. Clear policies reduce uncertainty and build trust by giving developers confidence about when and how they can use generative AI responsibly. They also signal organizational support, which drives adoption: teams in the DORA study with clear AI policies saw up to 451% higher adoption rates than those without them.
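Relative figures like "451% higher" are easy to misread. Assuming the figure is a relative difference from the no-policy baseline (the report is the place to confirm its exact definition), it means roughly 5.5 times the baseline, not 4.5 times. The helper names and the baseline score below are hypothetical.

```python
def multiplier_from_percent_higher(percent_higher: float) -> float:
    """'X% higher' means new = baseline * (1 + X / 100)."""
    return 1 + percent_higher / 100


def percent_higher(new: float, baseline: float) -> float:
    """Relative difference of `new` over `baseline`, in percent."""
    return 100 * (new - baseline) / baseline


baseline_score = 2.0  # hypothetical adoption score without a clear policy
with_policy = baseline_score * multiplier_from_percent_higher(451)
print(round(with_policy, 2))  # 11.02
```

Going the other way, `percent_higher(with_policy, baseline_score)` recovers the 451% figure.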

To measure the impact of GenAI adoption, the DORA research group works from a simple chain: capabilities predict performance, which in turn predicts outcomes.

More specifically, they suggest a set of metrics to track regularly over time when evaluating success. Here is a sample:

Code assistant usage metrics (to indicate how adoption is progressing): licenses allocated, daily active usage, code suggestions accepted

Team-level metrics: AI task reliance (“how much have you relied on AI for various tasks?”), AI interactions (“how frequently have you interacted with AI?”), AI trust, % of time in flow, job satisfaction, productivity, impact

Service-level metrics: code complexity, code quality, documentation quality, technical debt, code review time, product performance

Organizational metrics: organization performance, customer satisfaction, operating efficiency, operating quality

(Note: the report lists more metrics; use the combination that best suits your organization.)
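The code assistant usage metrics become simple ratios once telemetry is available. The sketch below assumes a hypothetical daily record per team with license, active-user, and suggestion counts. The field names, and the use of suggestions shown as the denominator for the acceptance rate, are assumptions rather than definitions from the report. As the list notes, these numbers indicate how adoption is progressing, not whether it is paying off.

```python
from dataclasses import dataclass


@dataclass
class DailyUsage:
    """One day of hypothetical code-assistant telemetry for a team."""
    licenses_allocated: int
    active_users: int
    suggestions_shown: int
    suggestions_accepted: int


def ratio(numerator: int, denominator: int) -> float:
    """Safe division that returns 0.0 when there is nothing to divide by."""
    return numerator / denominator if denominator else 0.0


def usage_metrics(day: DailyUsage) -> dict[str, float]:
    """Adoption-progress metrics for a single day."""
    return {
        "daily_active_rate": ratio(day.active_users, day.licenses_allocated),
        "acceptance_rate": ratio(day.suggestions_accepted, day.suggestions_shown),
    }


def trend(values: list[float]) -> float:
    """Change from the first to the last measurement in a series."""
    return values[-1] - values[0] if values else 0.0


early = DailyUsage(licenses_allocated=200, active_users=90,
                   suggestions_shown=3000, suggestions_accepted=600)
later = DailyUsage(licenses_allocated=200, active_users=150,
                   suggestions_shown=4000, suggestions_accepted=1200)
rates = [usage_metrics(d)["daily_active_rate"] for d in (early, later)]
print(rates, round(trend(rates), 2))  # [0.45, 0.75] 0.3
```

Tracking the same ratios at a fixed cadence, rather than as one-off snapshots, matches the report's advice to measure regularly over time.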

The application

Generative AI has the potential to meaningfully improve both developer experience and team efficiency. The DORA findings show that AI adoption is associated with increases in flow, productivity, job satisfaction, and code quality, alongside faster reviews and better documentation. These are promising signals of the value these tools can deliver.

But these benefits aren’t guaranteed. Without the right foundations—like clear usage guidelines, strong feedback loops, and disciplined delivery practices—teams risk introducing instability, bloated batch sizes, or a decline in perceived work value. The difference between meaningful acceleration and organizational drag comes down to how intentionally AI is integrated into the development process.

Here are ways engineering teams can thoughtfully put GenAI into practice within their companies.

Reinforce delivery fundamentals.

AI can increase output, but without small batch sizes and robust testing, that output can become a liability. Anchor AI use in practices that protect quality and stability.

Establish clear, empowering AI policies.

Policies that clarify where and how AI should be used reduce uncertainty and increase trust. Teams with defined policies saw 451% higher AI adoption in the DORA study.

Invest in fast, high-quality feedback loops.

When AI moves faster than the systems around it, tight feedback loops for iterating and learning become essential. Strengthen test coverage, code reviews, and observability to ensure AI-generated changes are safe and valuable. Also gather feedback from engineers regularly so you can adapt how you implement AI.

Give developers time and autonomy to learn.

The researchers found that individual reliance on AI peaks around 15 to 20 months into using the tool. Give developers space to experiment and iterate so their usage and adoption can grow naturally.

The research report includes additional recommendations on how to amplify developer value, foster trust, and build adoption. For more, check out the report directly.

As adoption accelerates, thoughtful execution—not just tooling—will determine how much value engineering teams can get from generative AI tools.

—

We hope this RDEL helps you get the most from your AI implementation. As always, happy Research Tuesday!

Lizzie