RDEL #80: What makes a code review useful?

Larger reviews tend to have a lower proportion of useful comments, stronger team cultures have higher-quality reviews, and more.

Welcome back to Research-Driven Engineering Leadership. Each week, we pose an interesting topic in engineering leadership and apply the latest research in the field to drive to an answer.

Code reviews are a cornerstone of software development for improving quality, knowledge sharing, and maintainability. But not all code review comments are equally useful. Some comments add real value, while others create noise, slow down development, and delay delivery. This week we ask: What makes a code review useful?

The context

For most teams, code reviews serve two critical roles in software engineering: quality control and knowledge sharing. They help catch defects, ensure adherence to team standards, and improve maintainability while also fostering learning, spreading expertise, and aligning teams on best practices. Developers dedicate an average of six hours per week to reviewing code or preparing their own for review.

In an era of AI-assisted coding and large language models (LLMs), code reviews are more essential than ever. While AI can generate code quickly, it currently lacks the judgment to fully account for context, maintainability, and alignment with business goals. Code reviews act as a safeguard, ensuring that both human- and AI-written code meets the highest standards while reinforcing shared team knowledge. However, not all reviews are equally effective. Understanding what makes feedback useful can help teams refine their process and get more out of every review.

The research

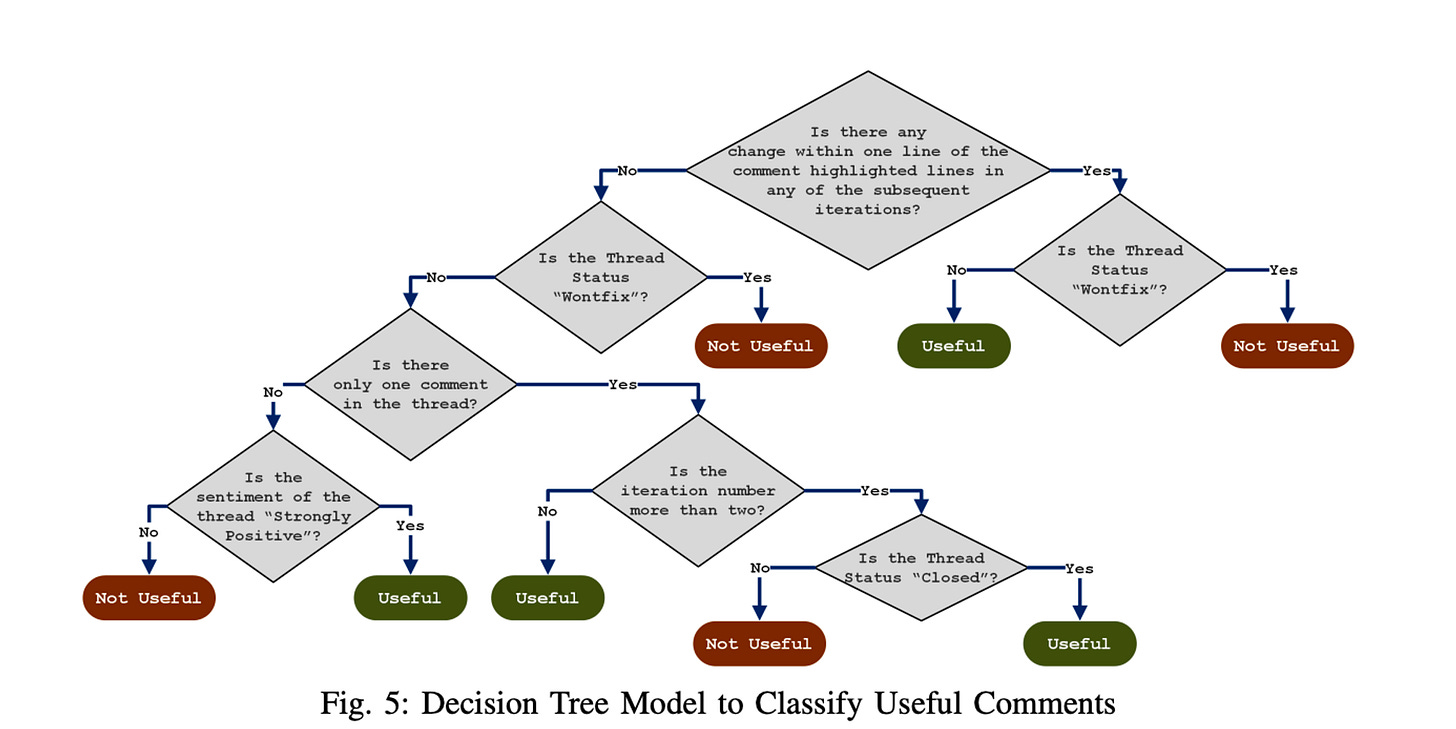

Researchers conducted a large-scale study analyzing 1.5 million code review comments across five major projects. They started by interviewing developers to understand what they considered valuable feedback. Using these insights, they built an AI-based classifier to distinguish useful from non-useful review comments. Finally, they applied this classifier to a massive dataset to uncover patterns and best practices for writing effective code reviews.

The researchers identified several attributes that help predict whether a review comment is useful: thread status, number of participants, number of comments, whether the author replied, number of iterations, whether the comment triggered a change, specific keywords, and sentiment.

Their key findings:

Experience matters: Reviewers who had previously worked on a file gave more useful feedback; 74% of their comments were rated useful, compared with 60% for first-time reviewers.

Useful-comment density drops in large reviews: The more files in a change, the lower the proportion of useful comments, suggesting that small, focused changes improve review quality.

New hires show an improvement curve: The usefulness of a reviewer's comments improves dramatically during their first year but plateaus afterward.

Certain types of comments are more useful: Comments identifying functional defects or validation gaps were rated over 80% useful, while vague questions and generic praise were deemed useful less than 50% of the time.

Psychological safety improves effectiveness: Developers were more likely to leave critical and useful feedback in teams with open, supportive cultures.

The application

Code reviews are still one of the most powerful tools in software engineering, serving as both a quality control mechanism and a knowledge-sharing process. This study highlights key factors that influence review usefulness, from reviewer experience to the size of code changes and the role of psychological safety. Investing in better code review practices doesn’t just improve individual pull requests—it enhances the long-term health, maintainability, and knowledge depth of an engineering organization.

Some specific steps to improve the effectiveness of code reviews include:

Select reviewers strategically: Assign at least one experienced reviewer who has previously worked on the file to ensure higher-quality feedback. Over time, spread that knowledge across the team so reviews don't bottleneck on the few people who have enough context.

Encourage small, focused changes: Large pull requests see a lower proportion of useful comments and are harder both to review and to revert later. Encourage incremental submissions.

Build a culture that welcomes candor: Invest in team practices that help people feel comfortable giving constructive criticism to their peers. This improves product quality and also builds a more resilient, higher-performing team culture.
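The first step, pairing an experienced reviewer with someone who is still building context, can be partly automated. Below is a minimal Python sketch of one possible heuristic. It assumes you can export a list of (developer, file) pairs from your version-control history and treats "has edited this file before" as a proxy for "has worked on the file." The function name, input format, and the "learner" pick are illustrative assumptions, not something the study prescribes.

```python
from collections import Counter


def suggest_reviewers(changed_files, history, pr_author, team):
    """Hypothetical helper: suggest (experienced, learner) reviewers for a change.

    changed_files: paths touched by the pull request.
    history: iterable of (developer, path) pairs from earlier changes
             (assumed input format, e.g. exported from version-control logs).
    pr_author: the change's author, never suggested as a reviewer.
    team: everyone eligible to review.
    """
    changed = set(changed_files)

    # Count how many of the changed files each developer has touched before.
    # set(history) dedupes, so many edits to one file still count once.
    familiarity = Counter()
    for dev, path in set(history):
        if path in changed and dev != pr_author:
            familiarity[dev] += 1

    # Experienced pick: the most familiar developer (ties broken by name).
    experienced = None
    if familiarity:
        experienced = min(familiarity, key=lambda d: (-familiarity[d], d))

    # Learner pick: the least familiar teammate, to spread context over time.
    # Counter returns 0 for developers with no history on these files.
    candidates = [d for d in team if d not in (pr_author, experienced)]
    learner = None
    if candidates:
        learner = min(candidates, key=lambda d: (familiarity[d], d))

    return experienced, learner


history = [
    ("ana", "src/db.py"),
    ("ana", "src/db.py"),
    ("ana", "src/api.py"),
    ("ben", "src/api.py"),
    ("cho", "docs/readme.md"),
]
print(suggest_reviewers(["src/db.py", "src/api.py"], history,
                        pr_author="ben", team=["ana", "ben", "cho", "dev"]))
# → ('ana', 'cho')
```

Pairing the two picks reflects both halves of the advice: the experienced reviewer supplies the higher-quality feedback the study observed, while the learner gradually gains context so that future reviews are not limited to a handful of people.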

By implementing these strategies, engineering leaders can ensure that code reviews drive meaningful improvements without wasting developer time.

—

Happy Research Tuesday,

Lizzie

I'm curious and concerned about how this all changes with the rise of AI code review tools. I worry that we'll lose more of the benefits of quality code reviews. If the AI tool is already performing the review, then maybe the rest of the team won't feel obligated to. We'd lose the insight of more experienced engineers, and the learning and communication won't happen because no one else is looking at the PR.

Very timely read for our team. We’re bringing in more code contributors and fine-tuning our PR review process, so this has some really useful takeaways. Appreciate the insights—thanks!