RDEL #38: Does AI help or hurt gender diversity in tech hiring?

This week we review how AI recruiting tools affect the diversity of candidates who move through the hiring pipeline.

Welcome back to Research-Driven Engineering Leadership. Each week, we pose an interesting question in engineering leadership and apply the latest research in the field to work toward an answer.

AI has become a powerful tool in the recruitment process, drastically changing how companies review and vet candidates. It’s also being applied in the tech industry, which already has a diversity problem. This week, we ask: does AI help or hurt gender diversity in tech hiring?

The context

Diversity has long been an issue within the tech industry. For example, women hold only 26.7% of tech positions. The imbalance extends to race as well: white Americans hold 62.5% of positions in the tech sector, while black Americans hold 7%, Asian Americans hold 20%, and Latinx Americans hold 8%. This raises concerns for many reasons, from economic impact to the idea that global technology products are being built by teams that represent only a portion of their users.

Improvements in the hiring process have been seen as a potential solution to the diversity problem. The rapid incorporation of AI in recruitment has significantly altered traditional hiring practices, from sourcing candidates to aggregating interview reports. Until recently, though, there was limited data on whether using AI in the recruitment process improves gender diversity. This week, we dig into the research.

The research

Researchers conducted two experiments to determine whether AI had positive or negative impacts on the tech hiring process.

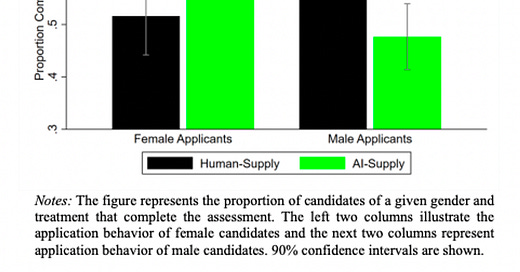

In the first experiment, researchers posted a job advertisement for a web developer position and then randomly assigned interested candidates to be evaluated by either AI or a human recruiter, telling each applicant which one it would be. The objective was to determine how informing job applicants that their applications would be evaluated by AI, as opposed to human recruiters, affects the gender distribution of applicants completing the application process.

Researchers found that:

The introduction of AI in the evaluation process significantly increased the completion rate of job applications among women. Conversely, the study found a decrease in the application completion rate among men when AI was introduced as the evaluator.

The change in gender distribution of applicants completing the process did not compromise the overall quality of applicants.

In the second experiment, professional evaluators from the tech sector were recruited to assess these applicants' suitability for the web developer position. Evaluators received candidates in one of three formats: names but no AI scores, names with AI scores, or neither names nor AI scores. The objective was to explore how providing employers (evaluators) with AI-generated scores affects their assessment of male and female candidates.

Researchers found that:

When evaluators assessed candidates without AI-generated scores and with knowledge of candidates' names, female candidates were rated lower than male candidates.

Providing evaluators with AI-generated scores alongside applications significantly reduced the gender bias observed in human evaluations. Male and female candidates were rated more equally when AI scores were available.

In the no-name treatment, where evaluators did not have information on the candidates' gender, there was no significant difference in scores between male and female candidates.

A common concern is that AI tools trained on biased data may reproduce that bias. To address it, the researchers estimated how the results would change if the AI tool had increasing levels of bias (10%, 25%, 50%, and 100%). They found that in almost all cases, even a more biased model would still perform better than a process without AI.

The application

The data offers a promising picture of how AI could help mitigate bias in tech hiring. Even allowing for potential bias in an AI tool, the study suggests that existing AI recruiting tools can do better than the human bias that already exists in the hiring process. This represents a huge opportunity to bring in a more diverse group of highly qualified tech employees who were traditionally rejected on the basis of perception, not skill.

For teams that are considering introducing AI tools in their internal processes, this paper should be a promising sign that AI can be used to help create a more diverse, highly qualified hiring pipeline. This doesn’t mean taking humans out of the process, but rather finding ways to introduce automation where possible to mitigate the risk of bias (for example, in sourcing and initial screening).

Acknowledging and resolving bias on the team is still a critical part of having a robust hiring process, but introducing technology to reduce those opportunities for bias in the process is a great, high-impact way to make progress.
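
For teams that want to check how this plays out in their own pipeline, here is a minimal Python sketch of one way to compare application completion rates by gender and evaluator type, loosely mirroring the first experiment. Everything in it is an assumption for illustration: the `completion_rates` function, the record fields (`evaluator`, `gender`, `completed`), and the sample records are hypothetical and are not data or code from the study.

```python
from collections import defaultdict


def completion_rates(applications):
    """Return the share of started applications that were completed,
    keyed by (evaluator, gender). Field names are hypothetical."""
    started = defaultdict(int)
    completed = defaultdict(int)
    for app in applications:
        key = (app["evaluator"], app["gender"])
        started[key] += 1
        if app["completed"]:
            completed[key] += 1
    return {key: completed[key] / started[key] for key in started}


# Made-up records for illustration only; NOT data from the study.
sample = [
    {"evaluator": "ai", "gender": "f", "completed": True},
    {"evaluator": "ai", "gender": "f", "completed": True},
    {"evaluator": "ai", "gender": "m", "completed": True},
    {"evaluator": "ai", "gender": "m", "completed": False},
    {"evaluator": "human", "gender": "f", "completed": True},
    {"evaluator": "human", "gender": "f", "completed": False},
    {"evaluator": "human", "gender": "m", "completed": True},
    {"evaluator": "human", "gender": "m", "completed": True},
]

rates = completion_rates(sample)
for (evaluator, gender), rate in sorted(rates.items()):
    print(f"{evaluator:<5} {gender}: {rate:.0%}")
```

With real data, a gap between groups is only a starting point: sample sizes in a single hiring round are usually small, so any difference should be checked for statistical significance before drawing conclusions.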

—

Happy Research Monday,

Lizzie

From the Quotient team