RDEL #16: What makes a code review useful?

We look at the characteristics of a useful code review comment and how to improve code review culture.

👋 Hello and welcome to RDEL! Each week, we pose an interesting topic in engineering leadership and apply the latest research in the field to arrive at an answer. It’s Halloween week, the weather is turning, and I hope everyone is enjoying Harry Potter reruns. 🪄

This week, we dive into the characteristics of code reviews. On any team writing collaborative software, a significant amount of time goes into code reviews. With so much time spent reviewing, commenting on, and approving code, a team naturally wants that time to be well spent. With that in mind, we ask: what makes a code review useful?

The context:

A code review has several goals: to ensure that a change is free of defects, to confirm that it solves the problem at hand in a reasonable way, and to offer a learning opportunity by sharing how the implementation was done. The main building blocks of reviews are comments, where engineers write feedback, concerns, or questions for the author to address. The quality of a comment can vary tremendously. Good comments can improve an author’s implementation and develop their skills, while bad comments can waste time or create friction in the team’s collaboration.

Knowing what distinguishes a useful code review comment can help engineering teams communicate better, make better use of the code review process, and improve code review velocity.

The research:

Researchers at Microsoft studied the characteristics of useful code reviews in a three-part study. They first interviewed seven engineers across different teams to understand how they perceive useful code reviews, then built an automated classifier to distinguish useful from not useful comments, and finally applied it to 1.5 million code review comments at Microsoft to see how useful those comments were.

Using a manual classification of comments informed by the developer interviews, researchers identified several attributes that determine how useful a comment is: thread status, number of participants, number of comments, whether the author replied, number of iterations, whether the comment triggered a change, specific keywords, and sentiment.

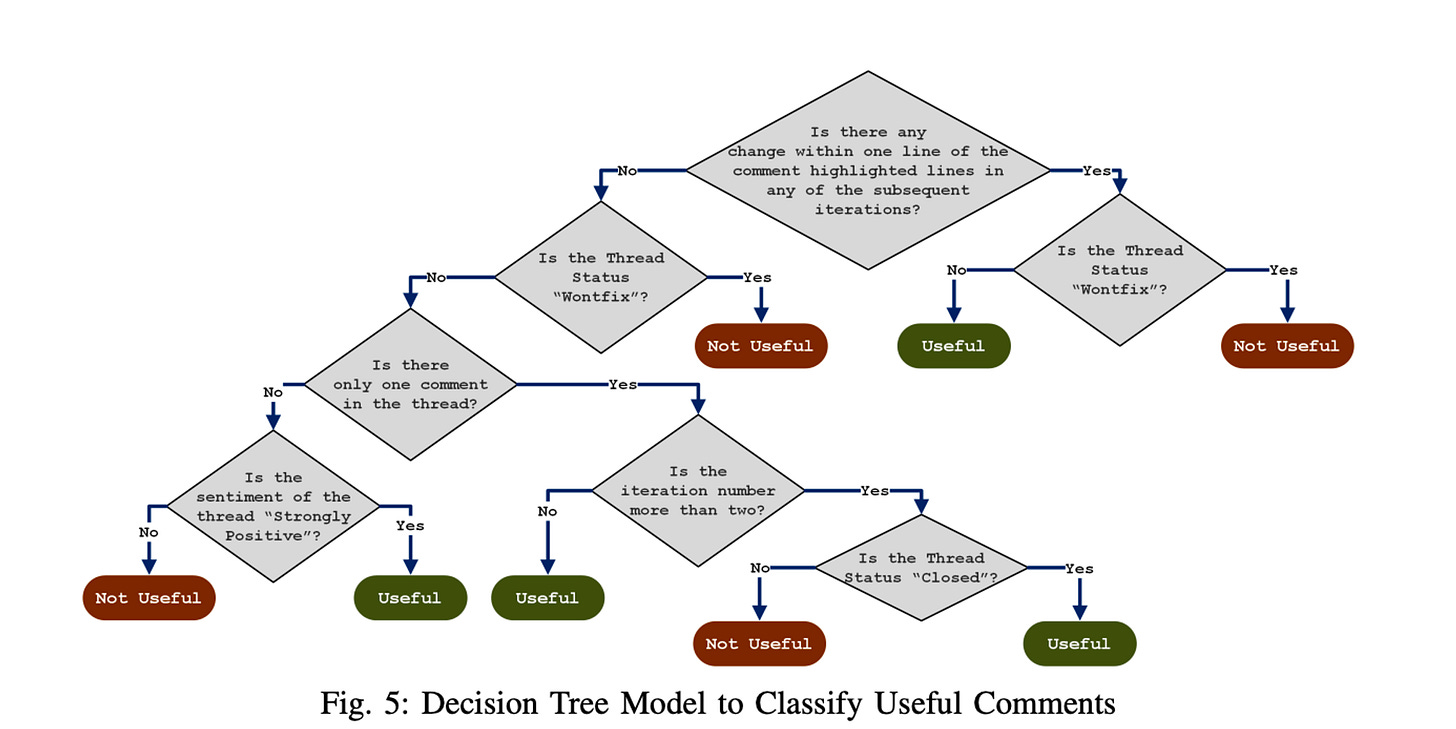

This is the classifier they built:

Researchers found several notable insights when applying their classifier to reviews at Microsoft:

If a comment triggered changes within one line of where the comment was posted, it was very likely to be a useful comment.
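To make the "within one line" signal concrete, here is a minimal sketch of how such a proximity check might look. It is a hypothetical illustration, not the researchers' implementation: the function name `triggered_nearby_change` and the `window` parameter are invented, and it assumes line numbers in the revised file can be compared directly with the line the comment was attached to (real tools have to map lines across iterations as code shifts).

```python
from typing import Iterable


def triggered_nearby_change(comment_line: int, changed_lines: Iterable[int], window: int = 1) -> bool:
    """Hypothetical check: did the next iteration change a line within `window`
    lines of the line the comment was posted on?

    Assumes `changed_lines` uses the same line numbering as `comment_line`.
    """
    return any(abs(line - comment_line) <= window for line in changed_lines)


# A comment on line 42; the next iteration edits lines 43 and 80.
print(triggered_nearby_change(42, [43, 80]))  # True
# The next iteration only edits lines 50 and 80.
print(triggered_nearby_change(42, [50, 80]))  # False
```

A window of one line keeps the signal strict: an edit far away in the same file is not counted as a response to the comment.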

Surprisingly, when an author doesn’t participate in a comment thread, it is more likely to be useful (88% chance) than a comment thread where they do participate (49%). Researchers believe this is because a non-response often indicates an implicit acknowledgement.

Comments with an “extremely negative tone” were less likely to be useful, even though 51% of the comments analyzed were classified as such.

Comment threads with a single comment or participant were significantly more useful (88%) than threads with more than one comment or participant (51%). Comments made after the first two iterations were less likely to be useful.

Reviewers who previously reviewed or changed a file were more likely to give a useful comment on that file.

The larger the review (measured in lines or files changed), the less useful the review comments were. The researchers believe this is because reviewers tend to give larger changes only a cursory glance and miss things.

The application:

This paper does a great job of offering actionable insights for teams to increase the quality of their code reviews.

When a comment thread has more than two iterations, it’s time for a conversation. If a reviewer and an author aren’t on the same page within two iterations, that signals the need for synchronous communication. Talking it through gives both of them the opportunity to establish more context, walk through suggestions, and keep the review from stalling.
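If your team wants a nudge to follow the two-iteration rule of thumb, it can be automated. The sketch below is hypothetical: `ReviewThread`, its fields, and `threads_needing_sync` are invented for illustration and are not part of any code review tool's API. It assumes you can export each thread's iteration count and resolution status from your review system.

```python
from dataclasses import dataclass
from typing import Iterable, List


@dataclass
class ReviewThread:
    thread_id: str
    iterations: int  # hypothetical: number of review iterations the thread has stayed open across
    resolved: bool


def threads_needing_sync(threads: Iterable[ReviewThread], max_iterations: int = 2) -> List[str]:
    """Return IDs of unresolved threads that have gone past `max_iterations`,
    i.e. candidates for a synchronous conversation."""
    return [t.thread_id for t in threads if not t.resolved and t.iterations > max_iterations]


threads = [
    ReviewThread("naming", iterations=1, resolved=False),
    ReviewThread("retry-logic", iterations=3, resolved=False),
    ReviewThread("tests", iterations=4, resolved=True),
]
print(threads_needing_sync(threads))  # ['retry-logic']
```

Resolved threads are skipped on purpose: a long thread that has already converged does not need a meeting.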

Keep changelists small enough to make thorough reviews possible. When they get too big, reviewers won’t be able to cover as much depth and can miss important nuances that have a big impact on the code.

Be mindful of tone. Reviews should be strictly about the code, not the author. Any time a comment takes on too negative a tone, it increases the risk of leaving the author hurt or upset. If a reviewer feels they cannot deliver a piece of feedback without coming across as too negative, they should instead sync up with the author and discuss it together.

—

I hope these insights give you some opportunities to improve the usefulness of your code reviews. Wishing you all a happy Research Monday!

Lizzie

From the Quotient team