RDEL #128: How should product managers decide which tasks to delegate to AI?

62% of PMs use AI daily, but 47% cite quality concerns—and accountability remains firmly human

Welcome back to Research-Driven Engineering Leadership. Each week, we pose an interesting topic in engineering leadership and apply the latest research in the field to drive to an answer.

As generative AI tools become ubiquitous in software development, engineering leaders are helping their teams integrate these tools effectively without losing the human judgment that makes great products. Product Managers, who bridge business strategy and technical execution, are navigating this shift in real time too, but their experiences haven’t been well documented. This week we ask: What values guide product managers when deciding which tasks to delegate to generative AI, and how is this reshaping their role?

The context

While research and tooling have focused heavily on how engineers use AI for coding tasks, Product Managers occupy a unique position that has received far less attention. PMs translate business needs into product requirements, communicate with stakeholders, write specifications, and make strategic decisions—all activities where AI could potentially help, but where the stakes of delegation are high.

Unlike developers, who have clear use cases for AI, PMs face ambiguous choices. When a PM delegates writing a product specification to AI, who is accountable if it misses critical requirements? When they use AI to summarize user research, does it diminish their connection to customer needs? These aren’t just productivity questions; they’re questions about professional identity, accountability, and the future of knowledge work. Understanding how PMs navigate these decisions is crucial for engineering leaders managing cross-functional teams.

The research

Researchers at Microsoft conducted a large-scale mixed-methods study: they surveyed 885 Product Managers, analyzed telemetry data from 731 PMs, and interviewed 15 PMs in depth to understand their GenAI adoption patterns, delegation decisions, and evolving practices.

Key findings include:

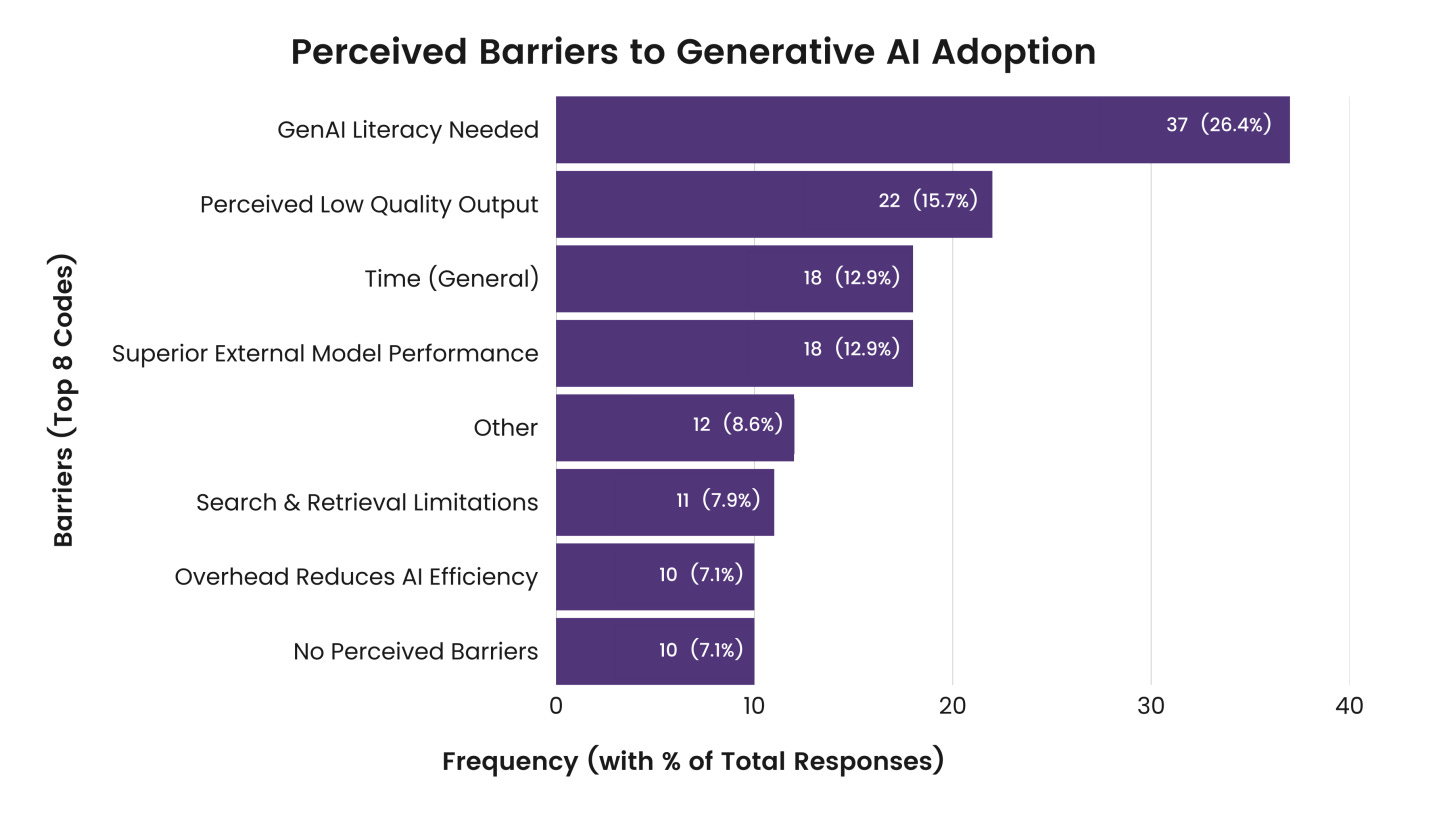

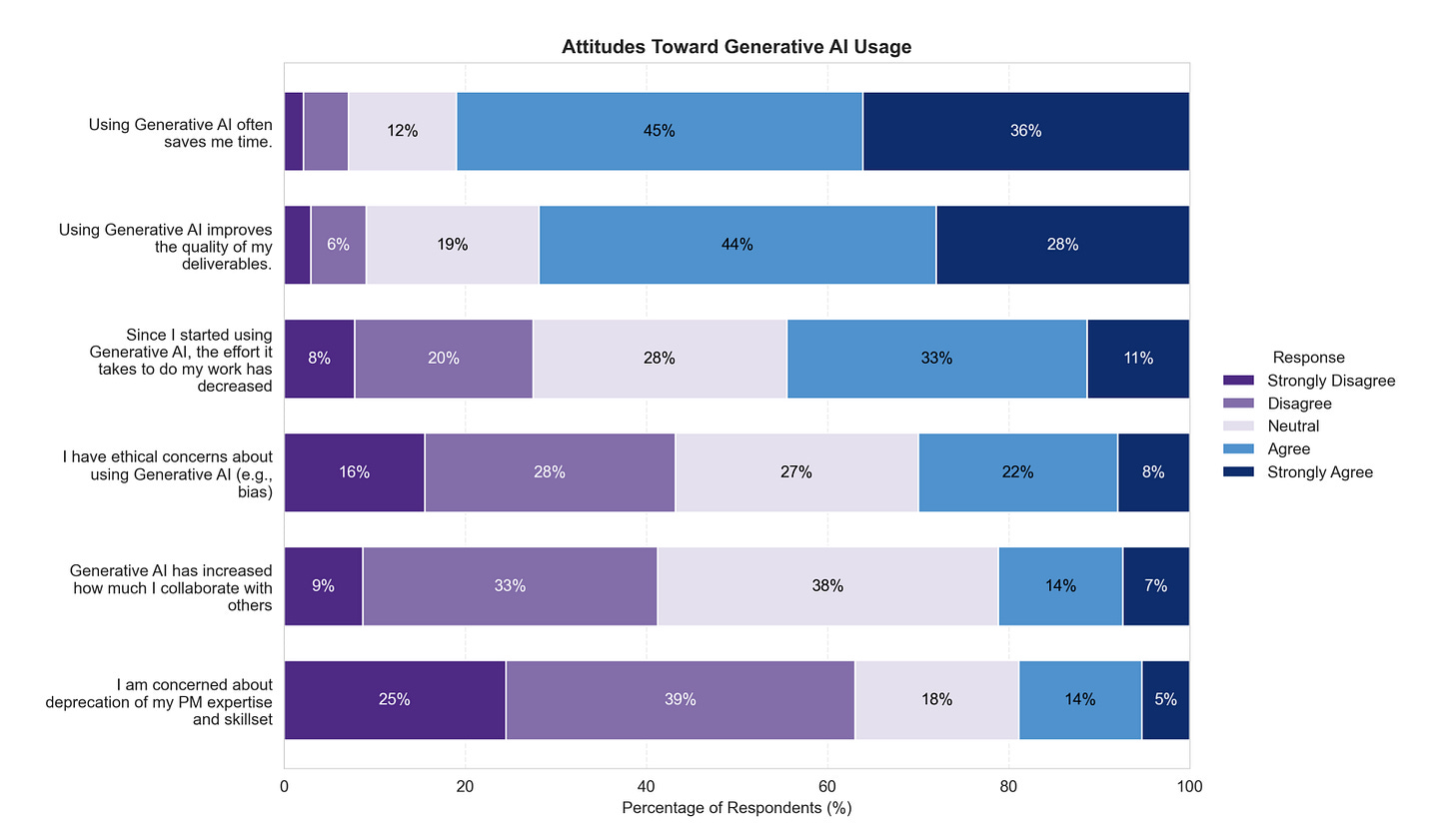

High adoption with quality concerns: 62% of PMs reported using GenAI daily or almost daily, but 47% cited concerns about output quality or inaccuracies as a barrier. While 81% agreed that “Using GenAI often saves me time,” the effort required to do their work hasn’t decreased for 56% of respondents, suggesting significant overhead in validating and refining AI outputs.

Identity-driven delegation decisions: PMs were reluctant to delegate tasks central to their professional identity. Tasks like formatting or meeting summaries were easily delegated, but core PM work like writing specifications or journey maps triggered resistance.

Accountability cannot be transferred: PMs consistently emphasized that accountability must remain with humans, even when using AI.

“Accountability must not be delegated to non-human actors.”

- Study participant

Blurring of PM-SWE boundaries: 12% of PMs reported using GenAI for prototyping and coding—traditionally SWE tasks. PMs described “vibe coding” to strengthen their PM skills and feeling empowered to take a “more front-line role working with data and building prototypes,” suggesting a significant shift in role boundaries.

Learning through experimentation: PMs developed their GenAI delegation skills primarily through self-directed experimentation rather than formal training. However, lack of PM-specific GenAI literacy was cited as the biggest barrier, with PMs requesting “clear examples of what I can use GenAI for and how...to the implementation I have of the PM role.”

The application

The research reveals that successful AI integration isn’t just about providing tools—it’s about helping teams develop judgment about when and how to delegate. PMs are making these decisions based on professional identity, accountability, and social dynamics at individual, team, and organizational levels, not just productivity gains.

Engineering leaders can apply these findings in three ways:

Create space for experimentation without mandating outcomes: Rather than pushing adoption metrics, encourage PMs to experiment with AI on low-stakes tasks first. Set up “AI office hours” where team members can share what worked and what didn’t, normalizing both successes and failures.

Clarify accountability frameworks before scaling AI use: Have explicit conversations about who is accountable when AI is involved in deliverables. Help PMs understand that using AI doesn’t transfer accountability—they remain responsible for validating outputs and ensuring quality.

Invest in role-specific AI literacy, not just general training: Generic AI training isn’t enough. Work with your PMs to document PM-specific use cases, prompting techniques, and quality validation approaches. Consider having senior PMs create a playbook of effective and ineffective AI delegation patterns specific to your organization’s PM responsibilities.

—

Happy Research Tuesday!

Lizzie
