RDEL #127: How is Google using AI for internal code migrations?

In one of the migrations studied, 80% of the code in landed changes was fully AI-authored, but success required sophisticated file discovery, multi-stage validation, and human review processes.

Welcome back to Research-Driven Engineering Leadership. Each week, we pose an interesting topic in engineering leadership and apply the latest research in the field to drive to an answer.

Code migrations are often a dreaded task in software engineering—updating thousands of files to adopt new frameworks or fix accumulated technical debt. At enterprise scale, these can require hundreds of engineering years, often stalling indefinitely. This week we ask: how is Google leveraging AI for internal code migrations?

The context

Large software organizations face a constant challenge: maintaining massive, mature codebases while keeping up with evolving frameworks, libraries, and business demands. In companies whose codebases are more than 20 years old and contain hundreds of millions of lines of code, technical debt accumulates in predictable ways. Legacy test frameworks persist, deprecated libraries remain in use, and experimental flags become permanent fixtures. While these aging patterns don’t immediately break functionality, they slow development velocity, confuse new engineers, and create barriers to adopting modern practices.

Traditional migrations built on abstract syntax trees (ASTs) work well for straightforward transformations but struggle with contextual nuances and edge cases. The result? Migrations that would take hundreds of engineering years get perpetually delayed, or organizations simply accept the technical debt as a permanent cost of doing business. The emergence of large language models presents a new opportunity, but whether they can actually accelerate these complex, business-critical migrations at enterprise scale remains an open question.
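To make the distinction concrete, here is a minimal sketch of the kind of step AST tooling handles well: finding every call site of a deprecated function. It uses Python's standard `ast` module on Python source purely for illustration (the report concerns Google's own languages and tooling), and the function name `old_parse_date` is hypothetical.

```python
import ast

# Hypothetical deprecated API name; stands in for whatever a migration targets.
DEPRECATED_CALL = "old_parse_date"


def find_deprecated_calls(source: str, name: str = DEPRECATED_CALL) -> list[int]:
    """Return the sorted line numbers of every call to `name` in `source`."""
    tree = ast.parse(source)
    lines = []
    for node in ast.walk(tree):
        if isinstance(node, ast.Call):
            func = node.func
            # Plain calls such as old_parse_date(x).
            if isinstance(func, ast.Name) and func.id == name:
                lines.append(node.lineno)
            # Attribute calls such as utils.old_parse_date(x).
            elif isinstance(func, ast.Attribute) and func.attr == name:
                lines.append(node.lineno)
    return sorted(lines)


example = """
import utils

def handler(raw):
    parsed = utils.old_parse_date(raw)
    fallback = old_parse_date(raw, strict=False)
    return parsed or fallback
"""

print(find_deprecated_calls(example))
```

Running it prints `[5, 6]`. What the AST cannot tell you is whether the `strict=False` call on line 6 needs a different replacement than the call on line 5. That contextual judgment is the gap the report looks to LLMs to fill.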

The research

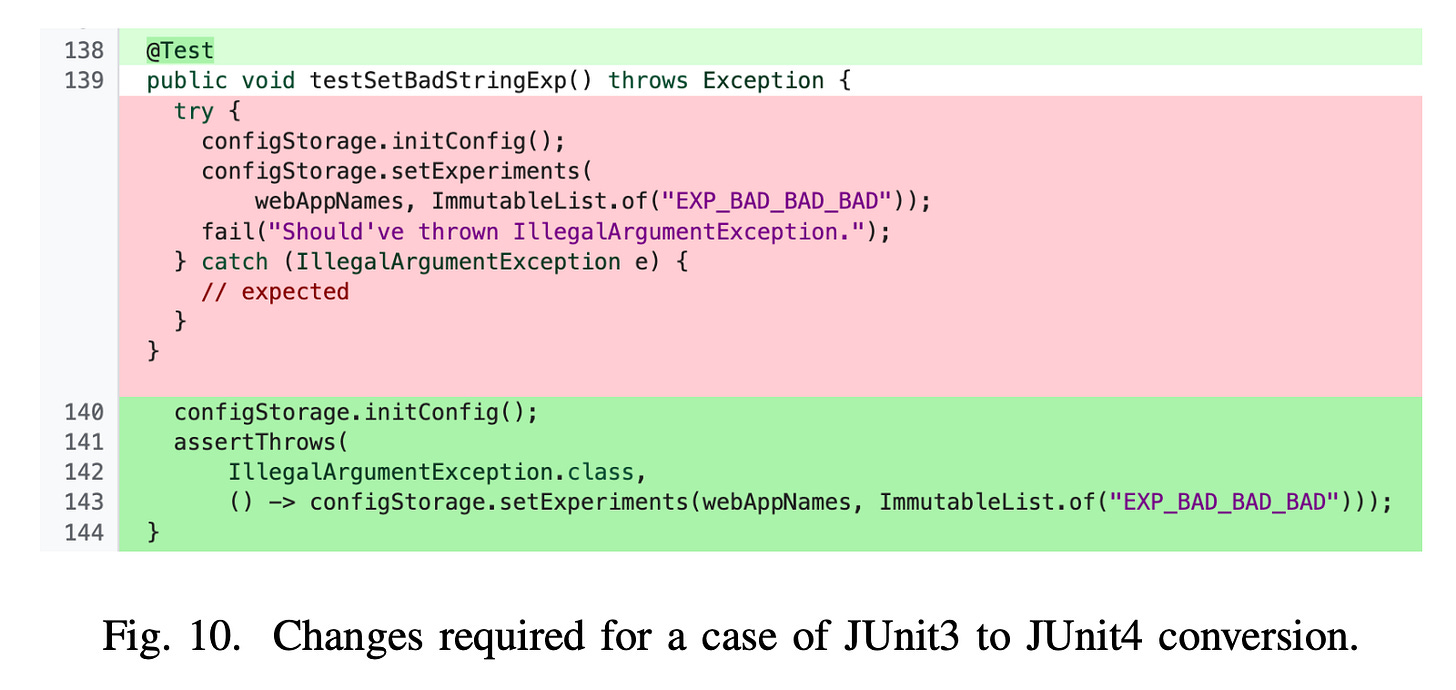

This experience report from Google examines LLM-based approaches across four major code migrations, measuring success by whether AI achieved at least 50% acceleration in end-to-end task completion. The migrations studied range from converting 32-bit IDs to 64-bit in Google Ads’ codebase of more than 500 million lines to modernizing thousands of JUnit3 test files.
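The success criterion is simple arithmetic once you fix a baseline. The sketch below assumes "acceleration" is read as the fraction of end-to-end time saved against an estimate of the same work done without LLMs, which matches how the findings report time savings; the function names and the engineer-day figures are hypothetical.

```python
def time_savings(baseline_days: float, assisted_days: float) -> float:
    """Fraction of end-to-end time saved relative to a non-AI baseline estimate."""
    if baseline_days <= 0:
        raise ValueError("baseline_days must be positive")
    return 1.0 - assisted_days / baseline_days


def meets_target(baseline_days: float, assisted_days: float, target: float = 0.5) -> bool:
    """True if the assisted migration saved at least `target` of the baseline time."""
    return time_savings(baseline_days, assisted_days) >= target


# Hypothetical numbers: a migration estimated at 400 engineer-days without LLMs
# that took 150 engineer-days end to end (discovery, generation, review, rollout).
print(time_savings(400, 150))  # 0.625
print(meets_target(400, 150))  # True
```

The key design choice is that `assisted_days` covers the whole pipeline, not just code generation; counting only generation time would overstate the savings.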

Key Findings:

80% of code in landed changes was fully AI-authored in the int32 to int64 migration, with “an estimated 50% time savings when compared to a similar exercise carried out without LLM assistance” when accounting for review and rollout.

87% of AI-generated code was committed without modification in the JUnit3 to JUnit4 migration, converting 5,359 files and 149,000+ lines in 3 months, with review becoming the primary bottleneck rather than code generation.

An estimated 89% time savings in the Joda time migration, with engineers reporting that even when AI made errors, the initial version helped them “quickly identify all places and dependencies to update.”

LLMs require supporting infrastructure: Success required combining AI with AST-based file discovery, multi-stage validation (automated builds and tests), and iterative repair loops where the model fixed its own errors based on failure feedback.

Teams completed “complex migrations that were stalled for several years” and saved “hundreds of engineers worth of work,” with AI-powered migration changelists steadily increasing throughout 2024.
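The validation and repair loop described in the findings can be sketched as a small driver: ask the model for an edit, run automated checks, and feed any failure output into the next attempt. This is a minimal illustration under stated assumptions, not Google's implementation. The model and the build/test system are passed in as callables, and `migrate_file`, `ValidationResult`, `max_attempts`, and the toy `fake_*` stand-ins are all hypothetical names.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class ValidationResult:
    ok: bool
    errors: str = ""  # e.g. compiler or test output to feed back to the model


def migrate_file(
    original: str,
    generate: Callable[[str, str], str],
    validate: Callable[[str], ValidationResult],
    max_attempts: int = 3,
) -> Optional[str]:
    """Generate an edit, validate it, and retry with failure feedback.

    Returns the validated contents, or None if every attempt failed
    (such files would go to a human instead of being landed).
    """
    feedback = ""
    for _ in range(max_attempts):
        candidate = generate(original, feedback)
        result = validate(candidate)
        if result.ok:
            return candidate
        feedback = result.errors  # repair step: next attempt sees the failure
    return None


# Toy stand-ins so the sketch runs: the "model" forgets an import on its first try.
def fake_generate(source: str, feedback: str) -> str:
    migrated = source.replace("int32", "int64")
    if "missing import" in feedback:
        migrated = "import int64_types\n" + migrated
    return migrated


def fake_validate(candidate: str) -> ValidationResult:
    if "import int64_types" not in candidate:
        return ValidationResult(ok=False, errors="build failed: missing import")
    return ValidationResult(ok=True)


print(migrate_file("id: int32", fake_generate, fake_validate))
```

Here the first attempt fails validation, the error text is passed back, and the second attempt succeeds. Capping attempts keeps a stubborn file from looping forever and makes the human-review fallback explicit.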

The application

Google’s experience shows AI-powered migrations can achieve 50%+ time savings and unblock stalled initiatives—but only when LLMs are part of a complete system including discovery, validation, and gradual rollout.

Engineering leaders should:

Combine AI with traditional tooling: Use AST-based techniques for file discovery and validation while leveraging LLMs for contextual transformations. Build reusable components that can be adapted across migration types rather than expecting simple prompting to work end-to-end.

Measure end-to-end time, not just code generation: Track the full migration timeline including discovery, review, and rollout. Set clear acceleration targets and remember that having an imperfect initial version still helps engineers identify what needs changing.

Plan for review and rollout bottlenecks: Code generation may accelerate dramatically, but reviewers can become overwhelmed. Google “purposefully limited the number of changes generated every week to avoid overwhelming reviewers.” Build reviewer capacity alongside your AI capabilities.
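The report says Google capped the weekly volume of generated changes but does not say how the cap was chosen. The sketch below assumes a simple capacity model (reviewers times reviews each can absorb per week) and splits an ordered backlog into weekly batches. Both function names and the changelist IDs are hypothetical.

```python
def weekly_cap_from_reviewers(reviewers: int, reviews_per_reviewer: int) -> int:
    """Hypothetical capacity model: total reviews the team can absorb per week."""
    return reviewers * reviews_per_reviewer


def schedule_weekly(changes: list[str], weekly_cap: int) -> list[list[str]]:
    """Split generated changes into weekly review batches of at most `weekly_cap`.

    Order is preserved, so changes listed first reach reviewers first.
    """
    if weekly_cap < 1:
        raise ValueError("weekly_cap must be at least 1")
    return [changes[i : i + weekly_cap] for i in range(0, len(changes), weekly_cap)]


changes = [f"cl-{n}" for n in range(1, 8)]  # seven hypothetical changelists
cap = weekly_cap_from_reviewers(reviewers=1, reviews_per_reviewer=3)
for week, batch in enumerate(schedule_weekly(changes, cap), start=1):
    print(week, batch)
```

With seven changes and a cap of three, the backlog takes three weeks. The number of batches, not generation speed, sets the floor on end-to-end time, which is why reviewer capacity belongs in the plan from the start.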

—

Have a wonderful week!

Lizzie
