RDEL #126: How does building software by "vibe coding" change developer workflows?

Research shows vibe coders spend over 20% of session time waiting for AI generation and often "roll the dice" by repeating prompts without adding new information.

Welcome back to Research-Driven Engineering Leadership. Each week, we pose an interesting topic in engineering leadership and apply the latest research in the field to drive to an answer.

AI-powered coding tools have moved far beyond autocomplete—developers are now building entire applications through natural language prompts, with some never looking at the code at all. This shift, dubbed “vibe coding,” promises to democratize software development, but it also introduces uncertainty where even debugging becomes probabilistic. This week we ask: How do developers actually work when building software primarily through AI prompts, and what are the hidden challenges of this approach?

The context

The landscape of AI-assisted programming has evolved rapidly from line-by-line code suggestions to agentic systems that edit multiple files and run shell commands autonomously. Modern tools like Cursor, Windsurf, v0, and Lovable now let developers build software primarily through conversation rather than code authorship, a practice called “vibe coding.”

Proponents celebrate its potential to democratize programming and accelerate prototyping, with some startups reporting that a quarter of their codebases are now almost entirely AI-generated. However, skeptics warn of security vulnerabilities and production incidents—including cases where AI agents deleted databases despite explicit instructions not to. With 84% of developers now using AI tools, understanding how practitioners actually engage with vibe coding becomes critical for engineering leaders.

The research

Researchers from UC Irvine, the University of Southampton, and industry conducted a grounded theory study analyzing 20 vibe-coding videos, including 7 live-streamed coding sessions (approximately 16 hours, 254 prompts) and 13 opinion videos, supplemented by detailed analysis of activity patterns and prompting strategies.

Top Findings:

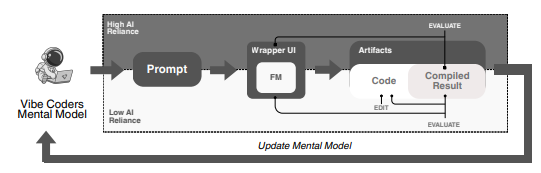

Vibe coding varies dramatically by expertise: Some coders relied entirely on AI without inspecting code, while others regularly examined outputs. High AI-reliance coders spent over 50% of session time waiting for generation and produced nearly 40% redundant prompts, while low-reliance coders inspected code and provided more prescriptive instructions.

Debugging becomes probabilistic, like “rolling the dice”: Coders with high AI-reliance sent up to 31 consecutive prompts containing only error messages, hoping the AI would eventually fix issues. Even feature refinement became stochastic, with previously working parts breaking unexpectedly.

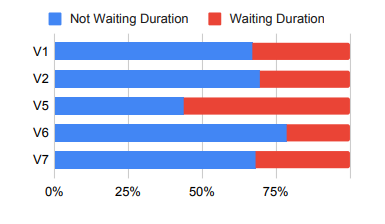

Over 20% of development time is spent waiting: Analysis revealed that more than 20% of session time was spent waiting for AI generation, with one session exceeding 50%. Developers coped by multitasking or sending parallel prompts, though this introduced conflicts requiring manual resolution.

Mental models shape effectiveness: Developers who inspected code provided specific prompts like “The focus-visible box shadow on the advanced options on the left...isn’t visible.. can you fix that?” Those who never examined code gave vague instructions and sometimes introduced errors.

Design fixation on first outputs: After issuing prompts like “Build a modern website UI,” vibe coders typically refined the first result rather than exploring alternatives, signaling potential design fixation that could lead to technical debt.

The application

The research reveals a fundamental tension in vibe coding: while it lowers the barrier to software creation, it shifts development from deterministic code changes to probabilistic outputs. This has significant implications for engineering teams—velocity gains may be offset by hidden costs like extended waiting time, increased debugging cycles from “rolling the dice,” and potential quality issues from developers who lack the mental models to evaluate AI-generated code effectively. Teams with mixed experience levels may see divergent outcomes, with junior developers particularly vulnerable to introducing errors or accumulating technical debt through design fixation on initial AI outputs.

To support teams adopting AI-powered development tools:

Maintain code literacy even when using AI agents. Developers with technical knowledge provided more effective prompts and caught issues that high AI-reliance coders missed. Encourage teams to periodically inspect generated code rather than treating it as entirely disposable.

Implement strong version control and testing guardrails. With AI making repository-wide changes that can break previously working features, establish practices like small incremental edits, frequent commits, and automated test suites—these are essential for reining in uncertainty at scale.
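
One way to make "small incremental edits, frequent commits, and automated test suites" concrete is a checkpoint step run after every AI-generated change. The sketch below is illustrative only and does not come from the study: the `checkpoint` helper, its `runner` parameter, and the choice of pytest as the test command are all assumptions. It commits the change when the tests pass and otherwise stashes it, so the working tree returns to the last known-good commit.

```python
import subprocess
from typing import Callable, Sequence


def run(cmd: Sequence[str]) -> bool:
    """Run a command and report whether it exited with status 0."""
    return subprocess.run(list(cmd), capture_output=True).returncode == 0


def checkpoint(
    message: str,
    test_cmd: Sequence[str] = ("python", "-m", "pytest", "-q"),  # assumed test runner
    runner: Callable[[Sequence[str]], bool] = run,
) -> str:
    """Commit the current change if tests pass; otherwise stash it.

    Hypothetical helper: `runner` is injectable so the flow can be
    exercised without touching a real repository.
    """
    if runner(test_cmd):
        # Tests are green: record a small, labeled commit.
        runner(["git", "add", "-A"])
        runner(["git", "commit", "-m", message])
        return "committed"
    # Tests are red: set the change aside instead of building on top of it.
    runner(["git", "stash", "push", "--include-untracked", "-m", f"failed: {message}"])
    return "stashed"
```

Calling `checkpoint("add login form")` after each accepted prompt keeps every commit tied to a passing test run, which makes a regression in a previously working feature easier to trace to a single AI edit.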

Factor waiting time into sprint planning. With over 20% of development time spent waiting for generation, the productivity gains from AI may be offset by latency, particularly for complex tasks requiring multiple iterations.
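
As a rough illustration of what that means for estimates, the sketch below converts hands-on work into total session time for a given waiting share. The `session_hours` function is hypothetical, and applying the study's session-level percentages to a team's own planning is an assumption, not a finding of the research.

```python
def session_hours(hands_on_hours: float, wait_fraction: float = 0.20) -> float:
    """Total session time needed to fit a given amount of hands-on work.

    wait_fraction is the share of the whole session spent waiting on
    AI generation (0.20 mirrors the "over 20%" figure reported above).
    """
    if not 0 <= wait_fraction < 1:
        raise ValueError("wait_fraction must be in [0, 1)")
    # If a fraction w of the session is waiting, only (1 - w) is hands-on.
    return hands_on_hours / (1 - wait_fraction)


print(session_hours(8))        # waiting share of 20%
print(session_hours(8, 0.50))  # waiting share of 50%, as in the slowest session
```

Under these assumptions, 8 hours of hands-on work needs about 10 hours of session time at a 20% waiting share and 16 hours at 50%, so the gap between the two is worth checking against a team's own tooling before committing to a sprint scope.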

—

Lizzie

A hierarchy of tests is also useful. I wonder if test-driven development will become more mainstream because of agentic coding.