RDEL #111: How do developers collaborate with AI agents when solving real-world software engineering problems?

Developers using incremental collaboration strategies succeeded on 83% of issues, while those using one-shot approaches succeeded on only 38%.

Welcome back to Research-Driven Engineering Leadership. Each week, we pose an interesting topic in engineering leadership and apply the latest research in the field to drive to an answer.

AI agents are rapidly evolving beyond simple code completion tools, now capable of autonomously performing complex software engineering tasks like debugging, testing, and implementing entire features. While these tools show promise on benchmarks, little is known about how developers actually work with them in practice or what collaboration patterns lead to success. This week we ask: How do developers collaborate with AI agents when solving real-world software engineering problems, and what factors determine their success?

The context

The landscape of AI-powered development tools has shifted dramatically from basic code completion to sophisticated agents that can understand entire codebases, execute terminal commands, and iteratively refine their outputs based on environmental feedback. These agents represent a fundamentally different paradigm from traditional AI coding assistants—they don't just generate code snippets, but can autonomously work through complex, multi-step development tasks while maintaining ongoing dialogue with developers.

However, this increased capability comes with new challenges. Unlike simpler AI tools that provide a single response, these agents make autonomous decisions that can affect entire codebases, potentially creating trust issues and increasing cognitive load for developers who must understand both the agent's reasoning and its modifications to their code. The question of how developers can most effectively collaborate with agents remains largely unexplored, leaving engineering leaders without clear guidance on how to integrate these tools into their team workflows.

The research

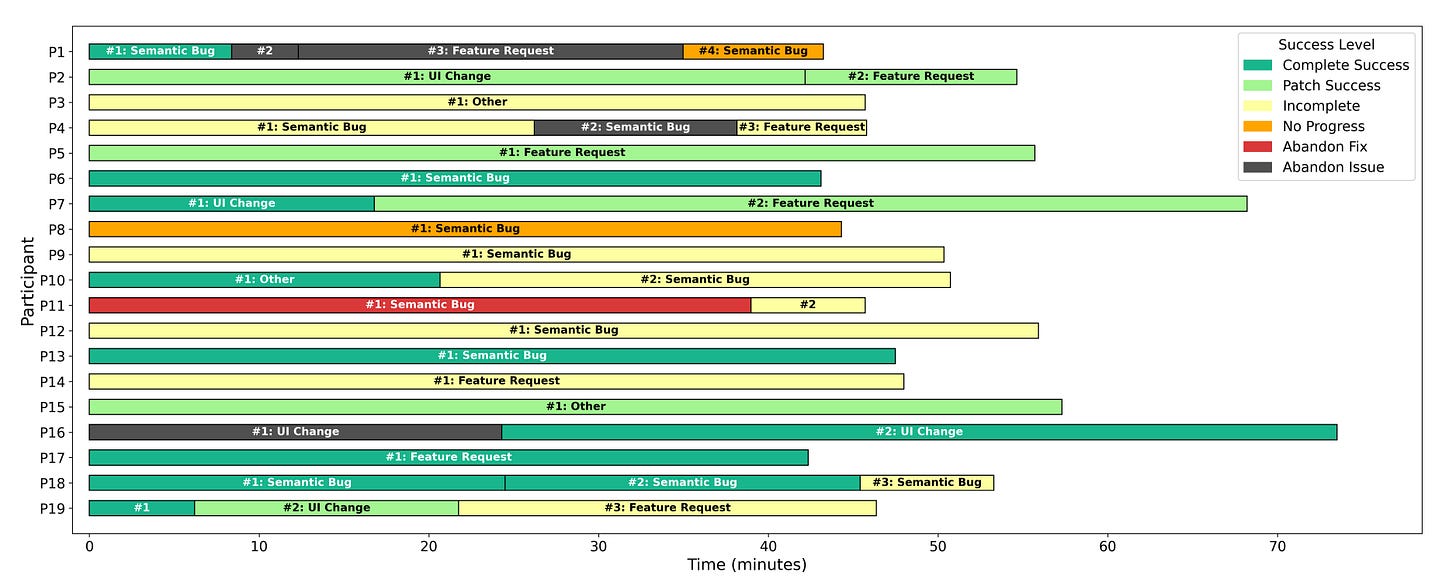

Researchers from Microsoft conducted an observational study with 19 professional software developers using Cursor to resolve 33 real open issues in repositories they had previously contributed to. The study involved video recording entire sessions while participants worked through GitHub issues, with researchers qualitatively coding both participant actions and chat interactions to identify successful collaboration strategies.

The key findings include:

Incremental collaboration dramatically outperformed one-shot approaches: Developers who broke tasks into sequential sub-tasks and worked iteratively with the agent succeeded on 83% of issues, compared to only 38% success for those who provided the entire issue description at once and expected a complete solution.

Active developer participation was essential for success: Participants who provided expert knowledge to the agent succeeded on 64% of issues, versus 29% for those who only provided environmental context. Those who manually wrote some code alongside the agent succeeded on 79% of issues, compared to 33% for those who relied entirely on the agent.

Developers preferred reviewing code over explanations: Participants reviewed code diffs after 67% of agent responses but only reviewed textual explanations after 31% of responses, indicating a strong preference for direct code verification over trusting agent descriptions.

Communication barriers significantly hindered collaboration: The agent made unsolicited changes beyond the prompt scope in 38% of cases where participants didn't request code changes, and participants were nearly 3x more likely to stop the agent when it included unwanted terminal commands.

Trust issues emerged from agent inconsistency: Developers expressed frustration when agents immediately agreed with feedback rather than engaging in substantive discussion.

"If I say that 'your change is wrong', it will revert that change. That makes me lose confidence in it." - Participant

The application

The research reveals that successful developer-AI agent collaboration requires active partnership rather than passive delegation. The most effective collaborations occurred when developers maintained continuous engagement, broke complex problems into manageable pieces, and contributed their domain expertise throughout the process rather than simply providing environmental feedback.

Engineering leaders can apply these findings in a few ways:

Promote incremental problem-solving approaches: Train developers to break complex issues into smaller sub-tasks and work with AI agents iteratively rather than expecting complete solutions from single prompts. This reduces the cognitive load of reviewing large changes and allows for earlier error detection and correction.

Encourage active developer participation in code generation: Rather than positioning AI agents as complete replacements for coding work, emphasize that the most successful collaborations involve developers writing code alongside the agent and providing expert knowledge throughout the process, not just at the end during review.

Establish guidelines for managing agent scope and autonomy: Create team standards for how to handle unsolicited changes, when to reset conversations, and when to stop agent execution. This will improve engineer-agent interactions and reduce the risk of agents derailing development work and eroding developer trust.
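One way a team might make the scope guideline concrete is a small check that compares the files an agent touched against the files the current sub-task was meant to touch. The sketch below is only an illustration under stated assumptions: the function name `out_of_scope_changes`, the glob patterns, and the file paths are all hypothetical, and nothing here comes from the study or from any particular agent tool.

```python
from fnmatch import fnmatchcase


def out_of_scope_changes(changed_files, allowed_patterns):
    """Return the changed files that match none of the allowed glob patterns.

    Hypothetical helper: `changed_files` is whatever list of paths the team
    collects after an agent step (for example, from a diff), and
    `allowed_patterns` is the scope agreed for the current sub-task.
    """
    return [
        path
        for path in changed_files
        if not any(fnmatchcase(path, pattern) for pattern in allowed_patterns)
    ]


# Assumed example: the sub-task was limited to a parser module and its tests.
allowed = ["src/parser/*", "tests/parser/*"]
changed = ["src/parser/lexer.py", "tests/parser/test_lexer.py", "package.json"]

flagged = out_of_scope_changes(changed, allowed)
print(flagged)  # files the developer may want to review or revert
```

A check like this does not decide anything on its own. It simply surfaces unsolicited changes early, so the developer can choose whether to accept them, revert them, or stop the agent and restate the sub-task.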

—

Happy Research Tuesday,

Lizzie