RDEL #109: How do AI-assisted code fixes impact code review time?

Meta’s study found reviewers slowed down by 6.7% when verifying AI fixes, while authors gained speed without extra burden

Welcome back to Research-Driven Engineering Leadership. Each week, we pose an interesting topic in engineering leadership and apply the latest research in the field to drive to an answer.

AI code assistants are rapidly becoming standard tools in software development, helping engineers write code faster and with fewer errors. But as these tools expand beyond code generation to assist with other parts of the development process, we're discovering unexpected challenges in how they impact team dynamics and workflows. This week we ask: how do AI-assisted code fixes impact code review time?

The context

Code reviews serve as a critical quality gate where experienced engineers examine proposed changes, suggest improvements, and share knowledge across teams. The process creates a natural mentoring opportunity but also represents a significant time investment - reviewers must carefully read code, identify issues, and write clear, actionable feedback.

When reviewers leave comments requesting changes, authors must then interpret those comments, implement fixes, and update their code. This back-and-forth can involve multiple rounds, taking time away from more complex work. The promise of AI assistance in this workflow is compelling: what if an AI could automatically generate the code fixes that address reviewer comments, saving authors time and reducing the review cycle? But this seemingly straightforward improvement raises an important question about responsibility and workflow design that wasn't obvious until tested at scale.

The research

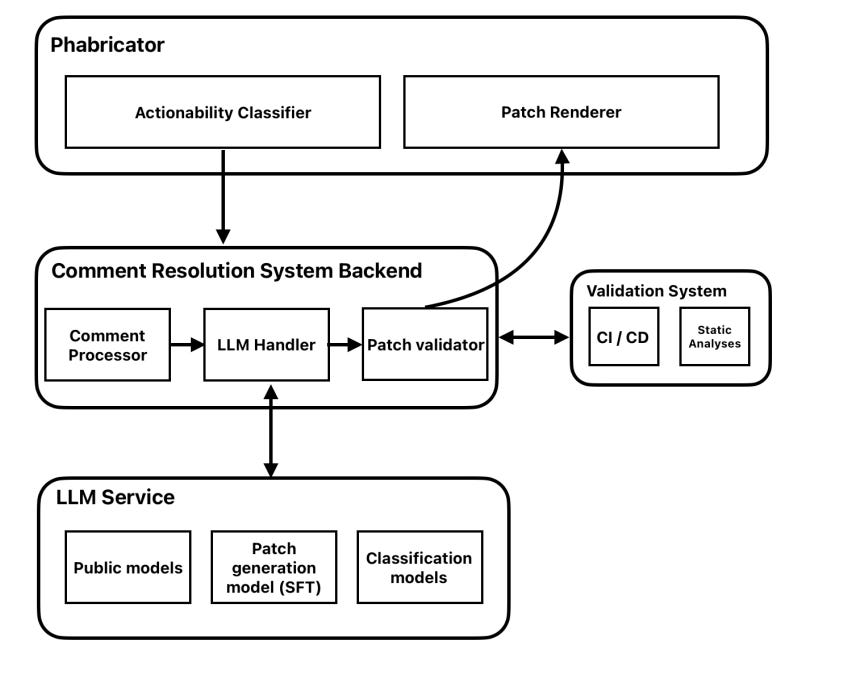

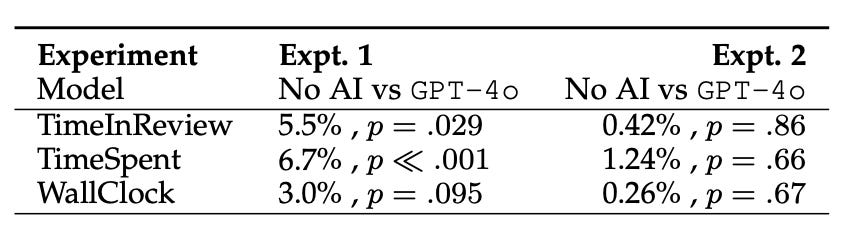

Researchers at Meta developed and deployed MetaMateCR, an AI system that automatically generates code patches to address reviewer comments, testing it with tens of thousands of engineers across four major programming languages. The study compared three models (GPT-4o, SmallLSFT, and LargeLSFT) through both offline evaluation on 206 manually-curated test cases, and production experiments with randomized controlled trials at Meta.

Key findings from the research:

Showing AI patches to reviewers increased review time - When reviewers could see the AI-generated fixes, they spent 6.7% more time actively examining diffs (p << 0.001), as they felt obligated to verify the AI's suggestions even though that wasn't their intended role. When the UI was modified to show patches only to authors (with reviewers able to expand if desired), review times returned to normal while maintaining the same acceptance rates

The best-performing model (LargeLSFT) achieved 68% exact match accuracy offline and 19.75% acceptance rate in production - This represents a 9.22 percentage point improvement over GPT-4o, achieved by fine-tuning on 64,000 internal code review examples

Internal context dramatically improved performance - While GPT-4o generated functionally correct fixes 59% of the time, it used outdated PHP functions instead of Meta's modern Hack APIs, highlighting the importance of training on company-specific codebases

Scaling training data from 2.9K to 64K examples improved exact match by 5 percentage points - Researchers used an AI classifier to expand the training set beyond manual labeling, demonstrating that volume can compensate for slightly lower quality in training data

The application

The research highlights that AI in code review is not a drop-in efficiency boost—it reshapes workflows and responsibilities. Reviewers who were shown AI fixes spent more time verifying them, while authors gained speed without added friction. Leaders can avoid unintended slowdowns by carefully scoping AI use, validating impact through trials, and tailoring models to their company’s codebase.Engineering leaders implementing AI code review assistants should:

Show AI suggestions only to those already responsible for that work - Authors should see fix suggestions, reviewers should see comment suggestions, avoiding new verification duties that slow down the process.

Run randomized controlled trials before full deployment - Meta caught a 5% slowdown in review time that wasn't apparent in offline testing, demonstrating why measuring actual impact on engineering velocity matters more than AI accuracy alone.

Invest in company-specific fine-tuning - The 9 percentage point improvement over GPT-4o shows that even sophisticated models miss critical context about internal APIs and coding patterns that determine real-world usefulness.

—

Happy Research Tuesday,

Lizzie

Interesting metric: fixing faster doesn’t always mean reviewing faster. Worth thinking through if you’re rolling out AI in your review loop.